Congratulations to several Galileo K-12 Online users on their recent accolades: schools within their districts have received the Colorado Governor’s Distinguished Improvement Award for exceptional student growth.

“On the school performance framework that is used by the state to evaluate schools, these schools ‘exceed’ expectations on the indicator related to longitudinal academic growth and ‘meet or exceed’ expectations on the indicator related to academic growth gaps,” according to the Colorado Department of Education website.

ATI is proud to partner with so many schools across the nation that have worked to raise student achievement, and we are pleased to see these schools recognized for their accomplishments.

Tuesday, December 27, 2011

Monday, December 19, 2011

A Time Saver for K-2 Teachers: The Early Literacy Benchmark Assessment Series

As an experienced kindergarten teacher, one of my foremost goals was finding ways to maximize instructional time with my students. My students were formally tested three times a year, and this was a very time-consuming task because each child needed to be tested individually. With budget cuts, no paraprofessional assistance was available, which left the assessing up to me, the teacher. There was also the added dilemma of how to manage 25-30 other five-year-olds while testing individual children, not to mention the loss of quality instructional time. During each testing period, it generally took a minimum of 10 minutes to assess each child in language arts alone. In a classroom of 25 students, this meant at least 250 minutes of quality instructional time lost, three times a year.
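To see what those figures add up to over a school year, here is a minimal Python sketch of the arithmetic. It uses only the numbers stated above (25 students, a minimum of 10 minutes each, three testing periods); the function name is ours, for illustration.

```python
# Figures from the paragraph above (language arts only).
STUDENTS = 25
MINUTES_PER_CHILD = 10  # stated as a minimum, so the results are lower bounds
TESTING_PERIODS = 3


def minutes_lost(students: int, minutes_per_child: int, periods: int = 1) -> int:
    """Instructional minutes spent on one-on-one testing."""
    return students * minutes_per_child * periods


per_period = minutes_lost(STUDENTS, MINUTES_PER_CHILD)
per_year = minutes_lost(STUDENTS, MINUTES_PER_CHILD, TESTING_PERIODS)
print(f"At least {per_period} minutes per testing period")
print(f"At least {per_year} minutes ({per_year / 60:.1f} hours) per year")
```

Because 10 minutes per child is a minimum, the yearly total of 750 minutes (12.5 hours) is a lower bound, and it covers language arts alone.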

The Galileo Early Literacy Benchmark Assessment Series (ELBAS) offers an effective way to complete literacy testing in a short period of time. In a computer lab, the entire class can be tested on standards-based objectives in 30-45 minutes. In a classroom, one or two students can test independently while the teacher continues to teach. Test responses are automatically recorded and scored online and are available to the teacher to help guide instruction. No instructional time is lost, and frustrating interruptions and behavioral problems are no longer a concern. The ELBAS tests are offered for grades K-2 and tell teachers whether their students are on track to meet state testing benchmarks by the end of grade 3. The ELBAS tests are a positive experience for students and teachers alike, help streamline assessment, and offer a wonderful time-saving solution for teachers during assessment periods. In addition, ATI can analyze the assessments and provide Developmental Levels to show growth over the course of the year.

Click here to see more information about the early literacy assessments.

-Laura Babcock, Educational Management Services Coordinator

Monday, December 12, 2011

Testing Activity Report

Galileo K-12 Online includes a number of useful administrative reports designed for such tasks as checking demographic data for errors and monitoring user activity. One of the administrative reports available in Galileo K-12 Online that may be particularly useful this time of year is the Testing Activity Report. This report can be run at the district or school level and can assist administrators in monitoring the extent to which scheduled benchmark or formative assessments have been taken.

To use the Testing Activity Report, the administrator selects a timeframe for assessments he or she wishes to monitor. A key feature is that you can select whether to display all tests administered during the selected time period, only benchmark tests, or only formative tests.

The resulting report lists all the selected assessments scheduled during the chosen time period, the number of students scheduled to take the tests, and the number who have done so.

If the Testing Activity Report is generated at the school level, another useful feature is that administrators can drill down to get a list of classes and the percent completion for each. With this information, administrators can identify potential problems and work with their staff toward a solution.
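Assuming you have class-level figures like those the report shows (students scheduled and students who have tested), the review step described above can be sketched in a few lines of Python. This is an illustration only: the ClassActivity record, the 90 percent threshold, and the sample numbers are hypothetical and are not part of Galileo K-12 Online.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClassActivity:
    """One class's row in a hypothetical list of class-level testing activity."""
    name: str
    scheduled: int  # students scheduled to take the assessment
    completed: int  # students who have taken it

    @property
    def percent_complete(self) -> float:
        # A class with no one scheduled has nothing outstanding.
        if self.scheduled == 0:
            return 100.0
        return 100.0 * self.completed / self.scheduled


def flag_low_completion(rows: list[ClassActivity], threshold: float = 90.0) -> list[ClassActivity]:
    """Return the classes below the completion threshold, lowest completion first."""
    low = [r for r in rows if r.percent_complete < threshold]
    return sorted(low, key=lambda r: r.percent_complete)


# Made-up example data for illustration only.
rows = [
    ClassActivity("Grade 3 - Room 12", scheduled=28, completed=28),
    ClassActivity("Grade 3 - Room 14", scheduled=25, completed=19),
    ClassActivity("Grade 4 - Room 21", scheduled=30, completed=24),
]
for r in flag_low_completion(rows):
    print(f"{r.name}: {r.percent_complete:.0f}% complete")
```

Sorting lowest-first puts the classes most in need of follow-up at the top of the list.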

Monday, December 5, 2011

Producing Significant Positive Changes in Children’s Cognitive Functioning

As early as 1991, research findings from a study* undertaken by John R. Bergan, founder and current President of ATI, indicated that a brief, two-month intervention in which assessment information was gathered to guide instruction had a direct effect on children's acquisition of basic math and reading skills. The acquisition of these skills, in turn, had a significant effect on promotion to first grade and on referral to and placement in special education. In the control condition, one in every 3.69 children was referred for possible placement in special education; in the intervention condition, only one in every 17 children was referred. In the control condition, approximately one in every five children was placed in special education; in the intervention condition, only one in every 71 was. Gathering assessment information to guide instruction continues to drive the design of the Galileo K-12 Online Instructional Improvement System.
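Rates stated as “one in every N children” are easier to compare as percentages. The short Python sketch below converts the four figures above; note that the source gives the control-group placement rate only approximately (“one out of every five”), so that conversion is approximate as well.

```python
# Rates reported above, expressed as "one in every N children".
reported = {
    "referred (control)": 3.69,
    "referred (intervention)": 17,
    "placed (control)": 5,  # "approximately one out of every five"
    "placed (intervention)": 71,
}


def one_in_n_to_percent(n: float) -> float:
    """Convert a 'one in every n' rate to a percentage."""
    return 100.0 / n


for label, n in reported.items():
    print(f"{label}: {one_in_n_to_percent(n):.1f}%")
```

Expressed this way, referrals fall from roughly 27 percent to about 6 percent, and placements from about 20 percent to under 2 percent.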

Current independent research, funded by recipients of a federal grant administered by the Massachusetts Department of Elementary and Secondary Education and carried out by MAGI Services, found strong evidence that the benchmark pilot project was a success. The study showed that Galileo K-12 Online assessments provide teachers with a tool to inform and shape their instruction, with the goal of increasing student mastery of learning standards. Read Full Paper

Other success stories can be found on our website at ati-online.com. Please share your own success stories with your Field Services Coordinator; we would be privileged to feature them in future posts.

*J.R. Bergan, I.E. Sladeczek, and R.D. Schwarz, American Educational Research Journal, Volume 28, Number 3 (Fall 1991), pp. 683-714. © 1991 by American Educational Research Association. Reprinted by permission of SAGE Publications.

Monday, November 28, 2011

Computerized Adaptive Testing…a better way with Galileo K-12 Online

Computerized Adaptive Testing (CAT) is a form of assessment in which items are selected based on each student's ability level. Initial item selection typically assumes that the test taker is of average ability; each subsequent item is selected based on an ability estimate obtained from the preceding responses. This approach optimizes precision by favoring items that are neither too easy nor too difficult for the individual student. CAT is efficient and quickly identifies gaps in student learning.
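The adaptive loop described above can be illustrated with a small simulation. The Python sketch below rests on assumptions of our own: a Rasch (one-parameter item response theory) model, maximum-information item selection, and expected a posteriori (EAP) ability estimation with a standard normal prior. It is not Galileo's implementation, and the function names, item pool, and simulated student are hypothetical.

```python
from __future__ import annotations

import math
from typing import Callable


def p_correct(theta: float, b: float) -> float:
    """Rasch (1PL) probability that a student of ability theta answers an item of difficulty b correctly."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_information(theta: float, b: float) -> float:
    """Fisher information of a Rasch item at theta; largest when b equals theta."""
    p = p_correct(theta, b)
    return p * (1.0 - p)


def eap_estimate(responses: list[tuple[float, int]]) -> float:
    """EAP ability estimate over a grid of theta values, using a standard normal
    prior centered on average ability."""
    grid = [g / 10.0 for g in range(-40, 41)]  # theta from -4.0 to 4.0
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)  # unnormalized N(0, 1) prior
        for b, correct in responses:
            p = p_correct(theta, b)
            w *= p if correct else 1.0 - p
        weights.append(w)
    return sum(t * w for t, w in zip(grid, weights)) / sum(weights)


def run_cat(pool: list[float], answer: Callable[[float], int], n_items: int) -> float:
    """Give up to n_items adaptively from a pool of item difficulties; return the final estimate."""
    theta = 0.0  # start by assuming average ability
    remaining = list(pool)
    responses: list[tuple[float, int]] = []
    for _ in range(min(n_items, len(remaining))):
        # Choose the unused item that is most informative at the current estimate,
        # i.e. the one whose difficulty is closest to it (neither too easy nor too hard).
        b = max(remaining, key=lambda d: item_information(theta, d))
        remaining.remove(b)
        responses.append((b, answer(b)))
        theta = eap_estimate(responses)  # update ability from all responses so far
    return theta


# Simulated student: answers every item easier than difficulty 1.0 correctly.
pool = [-2.0, -1.5, -1.0, -0.5, 0.0, 0.5, 1.0, 1.5, 2.0]
estimate = run_cat(pool, answer=lambda b: int(b < 1.0), n_items=6)
print(f"Estimated ability after 6 items: {estimate:.2f}")
```

Under the Rasch model, maximum-information selection simply picks the remaining item whose difficulty is closest to the current ability estimate. EAP estimation is used here because, unlike maximum likelihood, it still gives a finite estimate when a student has answered every item correctly (or incorrectly).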

Even better is the fact that ATI automates the construction of computerized adaptive tests. Tests are generated by ATI from an Assessment Planner that defines the item pool to be used in selecting items for the adaptive assessment. Automated construction provides ATI the capability to generate customized computerized adaptive tests to meet unique district needs.

The Galileo Computerized Adaptive Testing Pilot is an integral part of ATI’s next generation comprehensive assessment system and is completely integrated into ATI’s Galileo K-12 Online Instructional Improvement System. Adaptive assessments serve many purposes including guiding instruction, monitoring progress, screening, and assisting in placement decisions.

Integration within Galileo ensures that teaching staff and administrators have easy and rapid access to the full range of innovative assessment, reporting, curriculum, and measurement tools, all provided within one system. ATI’s comprehensive assessment system is part of our ongoing commitment to continuous innovation so that school districts and charter schools can build their own capacity to lead change in ways that enhance the quality and impact of education for our nation’s children and youth.

ATI's approach to Computerized Adaptive Testing is discussed in greater depth in Composition of a Comprehensive Assessment System.

Experience Galileo during an online overview and see how it provides a better way to address the goal of raising student achievement. You can visit the Assessment Technology Incorporated website (ati-online.com), participate in an online overview by registering either through the website or by calling 1.877.442.5453 to speak with a Field Services Coordinator, or visit us at the

• Colorado Association of School Boards (CASB) 71st Annual Conference December 8 through 11 at the Broadmoor Hotel, Colorado Springs, Colorado.

• Arizona School Boards Association (ASBA)/Arizona School Administrators (ASA) 54th Annual Conference December 15 and 16 at the Biltmore Conference Center, Phoenix, Arizona.

Monday, November 21, 2011

Data Access Protection By Cloud-Based Service Providers

In an earlier post, I discussed some key benefits of cloud-based services, or Software as a Service (SaaS). While the benefits are significant, there are risks that must be considered when making decisions about SaaS.

To approach these risks and some of the questions prospective users of cloud-based services might ask, it is easiest to break the risks down into categories. In today’s post, I’ll take a look at two key questions potential SaaS users might have about data access control and protection:

- How can data be kept safe from unauthorized access? Whether a cloud-based service is used for a student information system (SIS), an instructional improvement system (IIS), data warehousing, or more than one of these, educators and the vendors providing these services take the protection of sensitive student data seriously. Effective security measures in the following areas give externally stored student data protection on par with that achieved by housing the data on internal servers:

- Security Hardware: Security hardware includes firewalls that filter network traffic to and from application and data servers, inspecting each packet and dropping traffic when a threat is detected. Load balancers and cache engines are another effective type of security hardware: they sit in front of the application servers so that clients never access those servers directly, which helps prevent operating system exploits.

- Security Software: The baseline of any effective secure software environment is up-to-date operating systems on all application and data servers. Microsoft reported that in the first half of 2011, less than one percent of the exploits it detected took advantage of a “zero-day vulnerability” (one that had not yet been patched by the vendor). In other words, over 99 percent of the attacks could have been prevented simply by keeping operating systems and software well patched. Antivirus and computer security software suites also fall into the category of security software. Finally, change management software can help keep the SaaS provider's Information Technology (IT) staff informed of any attempt to alter the application server environment without their knowledge.

- Secure Network Architecture: A well-designed network for any application-hosting environment includes segregation of application and data servers. This can be achieved by physically segmenting traffic or using a combination of segmentation and security hardware.

- Data Encryption: Encrypting data in transit between the service vendor and the client system is critical. The current best practice for Secure Sockets Layer (SSL) and Transport Layer Security (TLS) is to use a minimum of 128-bit encryption.
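On the client side, the same requirement can be enforced in code. The Python sketch below uses the standard library ssl module to build a context that verifies the server certificate and refuses older protocol versions, then lists any enabled cipher suites whose symmetric key is shorter than 128 bits. Using TLS 1.2 as the minimum is our assumption, reflecting present-day guidance rather than the 2011 recommendation above.

```python
from __future__ import annotations

import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses legacy protocol versions."""
    context = ssl.create_default_context()            # certificate and hostname checks on
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumption: TLS 1.2 as the floor
    return context


def ciphers_below(context: ssl.SSLContext, min_bits: int = 128) -> list[str]:
    """Names of enabled cipher suites whose symmetric key is shorter than min_bits."""
    return [c["name"] for c in context.get_ciphers() if c["alg_bits"] < min_bits]


ctx = make_client_context()
print("Minimum protocol:", ctx.minimum_version.name)
print("Enabled ciphers under 128 bits:", ciphers_below(ctx) or "none")
```

The resulting context can be passed to standard-library clients, for example urllib.request.urlopen(url, context=ctx).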

- Physical Security: An easily overlooked security concern is the physical environment of the service provider. Are application and data servers housed within a secure environment, and is access to the environment regulated by the vendor? Physical controls include personnel monitoring, multi-factor access authentication, external monitoring and other types of surveillance.

- How can SaaS vendors prevent authorized users from accessing data they shouldn’t? This problem develops easily: energy is focused on keeping the bad guys out, but once credentials are issued to trusted users, controls over appropriate access are overlooked. Even with proper physical and network controls, IT personnel are often leery of relinquishing data access control to an external system, because outsourced services often bypass, at least to some degree, the security controls implemented internally. Two primary types of external security models may be implemented:

- Hierarchical - Such as the user account structure employed by Galileo Online IIS. Users are allowed access to increasing levels of information dependent upon the scope of the user account.

- Access-based - A flatter model such as that employed by Google Documents. Discrete permissions are assigned to individual data objects on a per-user, or per-group, basis.

Under either model, user privileges can be managed effectively. In some cases a separate permissions structure is even a benefit, because individual changes can be made by the users of the system instead of relying on technical staff to implement them.
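The difference between the two models is where the access decision lives: in the account's position in a hierarchy, or in a permission list attached to each data object. The minimal Python sketch below illustrates both. The account levels, class names, and functions are hypothetical and do not describe how Galileo or Google Documents are actually implemented.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


# Hierarchical model: the scope of the account decides what it can see.
@dataclass(frozen=True)
class Account:
    """Hypothetical account scoped to a district, a school, or a single class."""
    level: str  # "district", "school", or "class"
    district: str
    school: Optional[str] = None
    class_id: Optional[str] = None


def hierarchical_can_view(account: Account, district: str, school: str, class_id: str) -> bool:
    """An account can view any class data at or below its own level."""
    if account.district != district:
        return False
    if account.level == "district":
        return True
    if account.level == "school":
        return account.school == school
    return account.school == school and account.class_id == class_id


# Access-based model: each data object carries its own permissions.
@dataclass
class SharedDocument:
    """Hypothetical data object with per-user and per-group read permissions."""
    owner: str
    readers: set[str] = field(default_factory=set)
    reader_groups: set[str] = field(default_factory=set)

    def share_with_user(self, user: str) -> None:
        # The owner grants access directly; no technical staff involved.
        self.readers.add(user)

    def share_with_group(self, group: str) -> None:
        self.reader_groups.add(group)


def access_can_view(doc: SharedDocument, user: str, user_groups: set[str]) -> bool:
    """A user can view the object if they own it or were granted access individually or via a group."""
    return user == doc.owner or user in doc.readers or bool(doc.reader_groups & user_groups)


principal = Account("school", district="D1", school="Lincoln")
teacher = Account("class", district="D1", school="Lincoln", class_id="3A")
print(hierarchical_can_view(principal, "D1", "Lincoln", "3B"))  # True: class is within the school
print(hierarchical_can_view(teacher, "D1", "Lincoln", "3B"))    # False: another teacher's class
```

In the hierarchical sketch, access follows automatically from where an account sits; in the access-based sketch, the owner of each object grants access one user or group at a time, which is what lets users manage changes without technical staff.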

Do you have other concerns about data access protection that haven’t been covered? In a future post I’ll consider another risk area presented by use of cloud-based services and address how vendors are overcoming those challenges to create safe, effective environments for their clients.

Monday, November 14, 2011

A powerful and often overlooked tool...

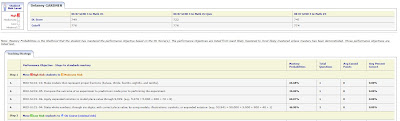

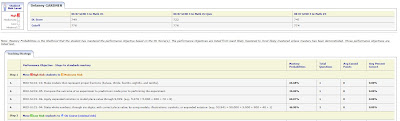

Nested within the Aggregate Multi-Test Report and the Benchmark Results Report is one of Galileo’s most powerful tools for intervention: The Benchmark Profile.

The goal of the Benchmark Profile is to provide a detailed plan for moving an individual student or an entire risk group into the next, lower-risk category.

There are two versions of the Benchmark Profile:

• Group Benchmark Profile

• Student Benchmark Profile

Features:

• Each standard appears along with the probability that the student has mastered it and the student’s average score on questions covering that standard (Student Benchmark Profile only).

• Every student’s Developmental Level Score (or average for the group) as well as the cut score for each benchmark is displayed at the top of the profile.

• Profiles will continuously update after each assessment to give you a current view of student progress.

• Links to schedule Assignments and Quizzes directly from the report (Group Benchmark Profile only).
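Placing a score relative to a set of cut scores is a simple lookup. As a rough illustration only, the Python sketch below assumes (this is not documented in the post) that a risk category is found by locating a Developmental Level score between ascending cut scores, and it reports how many points remain to the next cut. The cut values and category labels are hypothetical.

```python
from __future__ import annotations

from bisect import bisect_right
from statistics import mean
from typing import Optional


def place(score: float, cuts: list[float], labels: list[str]) -> tuple[str, Optional[float]]:
    """Return (category, points needed to reach the next cut score).

    `cuts` must be ascending and `labels` must have one more entry than `cuts`.
    A score equal to a cut counts as reaching it; the gap is None in the top category.
    """
    if len(labels) != len(cuts) + 1:
        raise ValueError("need exactly one more label than cut scores")
    i = bisect_right(cuts, score)
    gap = cuts[i] - score if i < len(cuts) else None
    return labels[i], gap


def place_group(scores: list[float], cuts: list[float], labels: list[str]) -> tuple[str, Optional[float]]:
    """Group version: place the group's average score."""
    return place(mean(scores), cuts, labels)


# Hypothetical cut scores and category labels, for illustration only.
CUTS = [900.0, 1000.0]
LABELS = ["High risk", "Moderate risk", "Low risk"]

print(place(870.0, CUTS, LABELS))  # ('High risk', 30.0)
print(place_group([940.0, 960.0, 1010.0], CUTS, LABELS))
```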

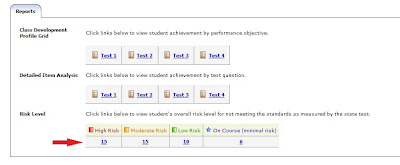

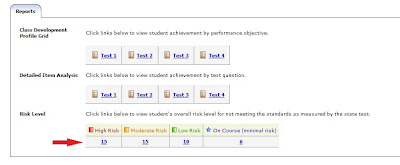

The Group Benchmark Profile can be accessed by going through the Benchmark Results Report:

• From the Reports Tab → Test Sets → Benchmark Results

• From the Dashboard → Benchmark Result (Under Recent Events)

From the Benchmark Results page you can simply select which risk category you would like to see a detailed plan for:

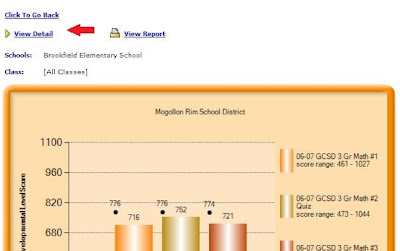

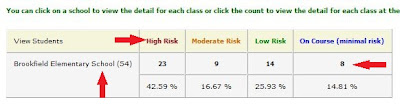

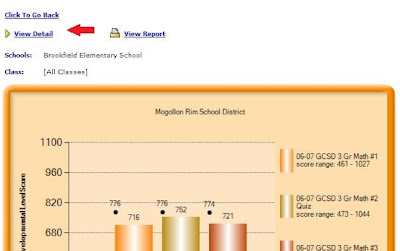

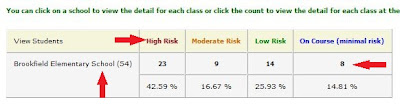

The Student Benchmark Profile can be accessed from within the Aggregate Multi-Test Report:

• From the Reports Tab → Multi Test → Aggregate Multi-Test

• Next, select the “View Detail” link.

• Although it may not look like it, everything on the next page is a link. Select a School/Class or Risk Category to see a list of the students in your selection.

• Select a student to bring up that individual’s customized Benchmark Profile.

-Denny Armstrong, Field Services Coordinator

Tuesday, November 8, 2011

Supporting National Head Start Association Accomplishments

As a long-time supporter of the National Head Start Association (NHSA), we would like to share a portion of a recent email from the Association that provided information on current media attention regarding their achievements.

Right now, there is a growing realization among business leaders and policy makers that investment in early childhood is key to the future success of our nation. There is also a greater understanding of the importance of the first five years of life in the development of character, and a greater appreciation of the long-term benefits to society of investing in high-quality programs such as Head Start and Early Head Start. In recent weeks, national media coverage has repeatedly mentioned Head Start. We have always seen the value of the work we do, but it’s exciting to see others sit up and take note! We wanted to share some stories with you that you may enjoy and that may be useful in educating others in your community about Head Start.

The following are a selection of links to articles provided in the Head Start email.

Occupy the Classroom - Nicholas Kristof for the New York Times

From Kindergarten to College Completion - Judith Scott-Clayton for the New York Times’ Economix Blog

It Takes a Village - Charles M. Blow for the New York Times

ATI supports NHSA in several ways. One is the development of the Galileo Pre-K Online Portrait of Child Outcomes, which brings together a broad array of Head Start programs from several states that have joined together for several years to create and share important stories about themselves and about children's accomplishments. NHSA has used the Portrait of Child Outcomes in presentations nationwide documenting the contributions of Head Start to early childhood learning. We are privileged to have the opportunity to bring their story to you; to read it, click here.

We also support Head Start by continuously enhancing our tools to meet the changing needs of the program. For example, we recently designed our new Galileo G3 Assessment Scales to align with the 2011 Head Start Child Development and Early Learning Framework, which represents the developmental building blocks most important for a child’s school and long-term success. To learn more, click here.

Monday, October 31, 2011

Are You Searching for a Better Way to Raise Student Achievement?

As you look for a technology solution for educational management, we encourage you to experience Galileo K-12 Online. Galileo K-12 Online from Assessment Technology Incorporated is a fully integrated, research-based instructional improvement system, providing next generation comprehensive assessment and instructional tools. State standards are built in and ready for use, as are the Common Core State Standards. Galileo K-12 Online management tools assist educators in establishing instructional goals reflecting the district’s curriculum, assessing goal attainment, forecasting standards mastery on statewide tests, and using assessment information to guide classroom instruction, enrichment, and reteaching interventions. ATI’s patented technology is uniquely suited to address a district’s goals when implementing standards-based strategies to raise student achievement.

With ATI’s cutting-edge technology and flexible innovations, users can:

• Administer a full range of assessments using ATI’s next generation comprehensive assessment system, including benchmark, formative, screening and placement tests, plus interim and final course examinations, pretests and posttests, early literacy benchmarks, computerized adaptive tests, and instruments documenting instructional effectiveness.

• Import, schedule, deliver, administer (online or offline), automatically score and report on assessments created outside of Galileo K-12 Online using ASK Technology.

• Increase measurement precision using Computerized Adaptive Testing (CAT), providing high levels of efficiency in several types of assessment situations.

• Use Item Response Theory (IRT)-generated assessment information to guide classroom instruction, enrichment, and reteaching interventions.

• Evaluate instruction based on reliable and valid assessment information including continuously updated forecasts of student achievement on statewide tests.

• Access the Dashboard, designed to provide immediately available, actionable information relevant in the teacher’s role of enhancing student achievement.

Experience Galileo during an online overview and see how it is the better way to raise student achievement. You can visit the Assessment Technology Incorporated website (ati-online.com), participate in an online overview by registering either through the website or by calling 1.877.442.5453 to speak with a Field Services Coordinator, or visit us at the

• Arizona Educational Research Organization (AERO) 24th Annual Conference November 3 at the University of Phoenix, Southern Arizona Campus, Tucson, Arizona.

• Arizona Charter School Association (ACSA) 16th Annual Conference November 10 and 11 at the Westin La Paloma Resort, Tucson, Arizona.

• Illinois Association of School Boards (IASB), Illinois Association of School Administrators (IASA), and Illinois Association of School Business Officials (IASBO) Joint Annual Conference November 18 through 20 at the Hyatt Regency Chicago, Chicago, Illinois.

Thursday, October 27, 2011

English Language Arts Test Design

English tests, by their nature, require the students to do a lot of reading. When designing these tests, how much reading is reasonable? How can we best assess students’ reading comprehension abilities without creating tests that are too long and have too many texts?

The answer comes at the very beginning of the process, in assessment planning. District pacing guides are meant to ensure that all classes are being instructed and students are making progress toward mastering essential standards. In designing a benchmark assessment, districts often use their pacing guides to plan assessments, but those pacing guides, while useful in tracking progress toward state standards mastery, rarely reflect the full scope of instruction that is occurring in the classroom during a benchmark period.

Do English teachers have their students read an entire story only to teach the idea of a main character? Of course not. They teach about plot, the author’s use of language, the context and setting of the story, how it relates to the author’s life experiences, and all of the other elements of literature that compose a novel, short story, poem, or dramatic work. However, pacing guides may only emphasize one of these standards in a particular assessment period.

The pacing guide approach to assessment design, while it has the benefit of matching the district’s plans for instructing and assessing standards, has a tendency to narrow the focus of instruction so much that the assessment requires a large number of texts to measure very specific aspects of a text, and leaves students’ holistic comprehension of a text unmeasured. When the purpose of assessment is to measure student progress, this seems like a missed opportunity.

Measurement reliability is best served by long tests: the longer the test, the greater the reliability. Balancing this against the realities of the class time available for assessment, we recommend 35-50 items per test.
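One standard way to quantify “the longer the test, the greater the reliability” is the Spearman-Brown prophecy formula, ρ' = kρ / (1 + (k - 1)ρ), where ρ is the current reliability and k is the factor by which the test is lengthened. It assumes the added items are parallel to the existing ones (similar content and difficulty). The Python sketch below is our illustration, not necessarily how ATI analyzes its tests, and the 0.80 starting reliability is made up.

```python
def spearman_brown(reliability: float, length_factor: float) -> float:
    """Predicted reliability after multiplying test length by length_factor,
    assuming the added items are parallel to the existing ones."""
    r, k = reliability, length_factor
    return k * r / (1.0 + (k - 1.0) * r)


def length_factor_for(reliability: float, target: float) -> float:
    """How many times longer the test must be to reach the target reliability."""
    return target * (1.0 - reliability) / (reliability * (1.0 - target))


# Made-up starting point: a 35-item test with reliability 0.80.
items, r = 35, 0.80
print(f"50 items: predicted reliability {spearman_brown(r, 50 / items):.3f}")
print(f"Items needed for 0.90: about {items * length_factor_for(r, 0.90):.0f}")
```

The second line shows the diminishing returns: with these assumed numbers, moving from 0.80 to 0.90 would take more than twice as many items, far beyond the class time realistically available.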

When a pacing guide emphasizes a few core standards, it helps to clarify expectations for everyone, but when a test measures only a narrow range of standards, many more questions are required per standard. When these standards are spread across multiple genres, or focused on comparing or synthesizing texts, we start to see unintended and undesirable characteristics, namely the inclusion of too many texts on an assessment.

For example, to address reliability with a 35-50 item test, a pacing guide of five learning standards would require seven to ten items per standard. That doesn’t seem like many items to fully measure a standard, until we consider a concept like main character. How many “main” characters will a short story have? Standards like this often require a new text for each question. Eight or ten texts seem like an awful lot to read to assess whether a student understands the concept of a main character.

How about comparing and contrasting two texts? When students are asked to compare and contrast texts across genres, or two different authors’ explorations of a similar theme, they have an opportunity to demonstrate analytical skills and synthesis of information, the higher-order thinking skills we want them to develop through reading. One or two questions that compare two texts, plus five to ten more questions that require in-depth analysis of each text, can measure these higher-order, holistic skills better than forcing students to read ten different texts to answer five questions focused only on comparison.

Some pacing guides strive for balance, incorporating elements of fiction and nonfiction in each benchmark period. This benefits students and teachers, allowing them to explore different forms of reading and writing, often in relation to each other. Complications arise when an assessment includes too few standards, or standards whose genres do not overlap. For example, measuring a single standard on the morals of folklore and mythology five to eight times, while the rest of the test addresses nonfiction standards, will produce a number of folklore texts with very few items per text and no possibility of overlap with the nonfiction standards.

So how do we address these concerns? Assessment Technology Incorporated has worked with a number of districts to develop a text packaging system that lets districts continue to emphasize the essential standards they want reflected in each benchmark while reducing the number of texts that appear on the tests. This is accomplished by including other learning standards that teachers are instructing, which may not appear on that benchmark period’s pacing guide but are an important component in measuring students’ overall reading comprehension. The package also balances the number of items per standard according to how often each skill comes into play in everyday reading. For example, the main character standard would get one item, compare and contrast maybe a couple, and elements of literature three or four: a reasonable distribution of the types of information students would see in a typical short work of fiction.

Another approach is to focus on one or two specific genres in an assessment rather than trying to address poetry, short story, persuasive text, informational text, and dramatic works in one assessment.

Testing by genre, or limiting the repetition of performance objectives (POs) that involve compare-and-contrast, cross-genre, cross-cultural, or single-instance items, also reduces the number of texts.
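To make the effect of these choices concrete, here is a minimal Python sketch of a simplified passage-count model. It is an illustration, not ATI’s actual packaging rules: it assumes standards in the same genre can share a passage, passages never serve two genres, and each standard is capped at a fixed number of items per passage (1 for single-instance standards such as main character). Both blueprints, their standard names, and their item counts are hypothetical and are not taken from the table that follows.

```python
import math
from dataclasses import dataclass


@dataclass
class StandardSpec:
    name: str
    genre: str
    items: int         # items devoted to this standard on the test
    max_per_text: int  # items one passage can sensibly support for it


def texts_needed(blueprint: list[StandardSpec]) -> int:
    """Fewest passages needed under the simplified model described above.

    Within a genre, the standard needing the most passages sets the count;
    the other standards' items can be spread across those same passages
    without exceeding their per-passage caps.
    """
    per_genre: dict[str, int] = {}
    for spec in blueprint:
        need = math.ceil(spec.items / spec.max_per_text)
        per_genre[spec.genre] = max(per_genre.get(spec.genre, 0), need)
    return sum(per_genre.values())


# Hypothetical 40-item blueprint driven by a narrow pacing guide.
pacing_guide = [
    StandardSpec("main character", "fiction", 8, 1),
    StandardSpec("morals of folklore", "folklore", 8, 1),
    StandardSpec("main idea", "nonfiction", 8, 2),
    StandardSpec("text features", "nonfiction", 8, 2),
    StandardSpec("author's purpose", "nonfiction", 8, 1),
]

# Hypothetical 40-item "packaged" blueprint: two genres, with items spread
# across related standards (one main-character item per story, etc.).
packaged = [
    StandardSpec("main character", "fiction", 4, 1),
    StandardSpec("plot", "fiction", 8, 2),
    StandardSpec("elements of literature", "fiction", 12, 3),
    StandardSpec("main idea", "nonfiction", 8, 2),
    StandardSpec("text features", "nonfiction", 4, 1),
    StandardSpec("author's purpose", "nonfiction", 4, 1),
]

for label, bp in (("pacing guide", pacing_guide), ("packaged", packaged)):
    total_items = sum(s.items for s in bp)
    print(f"{label}: {total_items} items, {texts_needed(bp)} texts")
```

With these invented blueprints, both tests have 40 items, but the narrow one needs 24 passages and the packaged one needs 8. Real counts also depend on passage length and on standards such as compare-and-contrast that require paired texts, which this model ignores.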

The table below shows a 2010-11 test created without the text packaging approach, alongside the same test adjusted by the methods outlined above. Note that with text packaging, the number of words students had to read was cut nearly in half, while the number of items on the two tests remained nearly the same. Fully utilizing texts, as the text packaging approach does, has several benefits: students read fewer texts on each assessment, they demonstrate a more thorough understanding of each text, the questioning is less repetitive, and, because each test uses fewer texts, more texts remain available for later assessments.

*GO is a graphic organizer. It does not have a word count.

**Note this is the total number of items on the test, not the sum of the column as some items have more than one text attached.

If you are interested in exploring the text packaging options available in Galileo K-12 Online, please contact your Field Service coordinator or Karyn White in Educational Management Services to learn more.

Monday, October 17, 2011

Question and Answer

Over the years I have talked with many clients and prospective clients, and their questions about implementing assessment and intervention are often specific to a school or district. Some questions, however, come up in district after district. Here I address the ones I hear most often.

What are the benefits of online vs. offline assessment?

While there are pros and cons to both methods of administration, there are strong reasons to lean toward online administration. Online testing saves the cost of paper (and is environmentally friendly) and gives immediate access to test results. Students today are technologically savvy and comfortable with online testing; I’ve observed groups of very young students navigating online tests with confidence and ease. Some could reasonably argue that district testing should mimic the format of the statewide assessment (e.g., AIMS, MCAS, CSAP, CST). However, our own research suggests that whether students take the test online or offline does not affect our ability to predict how they will do on the statewide assessment.

On the other hand, offline assessments can be administered to large groups of students at the same time, and reading texts and items are presented in a traditional format. Limited access to computers may also make offline testing necessary, and English language learners or other specific groups of students may benefit from working from a test booklet. Offline testing does involve the extra steps of printing test booklets and bubble sheets and scanning answer sheets once administration is complete, though plain-paper scanning, available for the past several years, has made the scanning step much quicker.

What grade levels should be included in our district’s assessment planning?

Recent policies have led to an emphasis on testing in grades three through 10. However, in every state, teachers at all grade levels are responsible for assessing progress toward the state standards. Teachers and students at all grade levels can benefit from a comprehensive assessment system that is aligned to state standards; provides information about mastery of standards to inform a variety of decisions (e.g., those related to instruction/intervention, screening/placement, and growth); and, with regard to instruction and intervention, recommends specific actions to improve student performance.

What subject areas should we be testing?

Math, reading, science, and writing are frequently included in assessment plans, as they encompass the core subject areas and most statewide assessments cover them. However, teachers in all subject areas should be encouraged to incorporate a comprehensive assessment system into their approach to instruction.

Should we build District Curriculum Aligned Assessments (DCAA) or use the Comprehensive Benchmark Assessment Series (CBAS)?

The DCAA is the optimal choice for districts that have common pacing guides (or curriculum maps) used across the district. DCAA are customized assessments intended to align with instruction. These tests measure student accomplishment and pinpoint the areas where reteaching is likely to be most valuable.

The CBAS is designed as a comprehensive assessment to give multiple snapshots throughout the year of progress toward standards mastery. These are built by ATI using the blueprints from the statewide assessments.

How long does it take to test a student?

This depends on many factors including the length of the test, the objectives being assessed, and the number and length of the reading texts. A 40-45 item assessment will likely take a typical class period to administer. Some students will take less time and some will take more. In general, Galileo assessments are not designed to be timed. The goal is to determine what the student knows. Although it’s ultimately a district decision, enough time should be allowed for students to complete all testing.

What are the best reports for teachers and administrators?

ATI has synthesized some of the most frequently used reports into a Dashboard where teachers and administrators can easily access actionable information. For example, the Dashboard contains one-click access to the:

1. Test Monitoring reports, which are graphical and show how individual students (or the class) performed on specific assessment standards;

2. Detailed Analysis Report, which links test items to state standards and reports response patterns for specific items; and

3. Intervention Alert Report, which helps teachers focus interventions on specific state standards and place students into intervention groups.

I hope you found this Q&A helpful. If you have additional questions, please contact ATI at 877.442.5453 or at GalileoInfo@ati-online.com.

-Baron Reyna, Field Services Coordinator

Monday, October 10, 2011

Using the Intervention Alert Report

The Intervention Alert Report lists all of the learning standards on a given assessment and displays the percentage of students who have demonstrated mastery of each one. Standards that fewer than 75 percent of students have mastered are highlighted in red, so users can easily identify the standards on which interventions should focus. The report is actionable: from it, users can schedule Assignments and Quizzes or drill down through the data to view individual Student Results.
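The red-highlight rule described above can be sketched in a few lines of Python. This is an illustration of the 75 percent threshold only, not Galileo’s implementation; the input shape (one True/False “mastered” entry per student for each standard), the standard names, and the class data are all hypothetical.

```python
MASTERY_THRESHOLD = 0.75  # standards below 75% mastery are highlighted in red


def intervention_alerts(mastery: dict[str, list[bool]],
                        threshold: float = MASTERY_THRESHOLD):
    """Return (standard, percent mastered, flagged) for each standard.

    `mastery` maps a standard to one True/False entry per student, meaning
    "this student demonstrated mastery". The shape is an assumption made
    for illustration, not Galileo's data format.
    """
    rows = []
    for standard, results in mastery.items():
        if not results:
            raise ValueError(f"no student results for {standard!r}")
        share = sum(results) / len(results)
        rows.append((standard, round(100 * share, 1), share < threshold))
    return rows


# Hypothetical class of four students.
class_results = {
    "Main idea": [True, True, True, False],
    "Text features": [True, False, False, True],
    "Author's purpose": [True, True, True, True],
}

for standard, percent, flagged in intervention_alerts(class_results):
    marker = "RED" if flagged else "ok"
    print(f"{standard:<18} {percent:5.1f}%  {marker}")
```

Here only "Text features" (50 percent) is flagged. "Main idea" sits exactly at 75 percent and is not, matching the rule that standards are highlighted when fewer than 75 percent of students have mastered them.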

The Intervention Alert Report provides a rich source of data for guiding evaluation. Each report shows what is happening with students’ learning, providing detailed data about students’ strengths as well as areas where additional intervention or planning will be beneficial. It gives educators an efficient means of tracking student mastery of different standards at several levels of aggregation: the report can be run at the District, School, or Class level. Educators can use this information to evaluate mastery of standards and plan accordingly.

1. Review your data… What does your data tell you about your classes and the students?

Which standards did students learn?

Which standards require additional focus to promote mastery?

What were the expectations for student learning?

Did the learning you expected occur?

2. Ask questions of your data… How can the data be used to provide learning opportunities for students that reflect class-level goals?

What mastery levels do most of your students fall into? For which standards?

Who are the students in each grouping? What Intervention Groups need to be created?

What variables that you know of could be impacting student achievement?

3. Use your data to begin the goal setting, planning and intervention process.

What expectations do you have for learning in the months ahead? For example, what type of movement do you hope to see from one mastery level to the next?

Which standards will you target?

What plans will be put in place to achieve these goals?

What can school personnel do to help you support individual students and their learning?
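The grouping questions in step 2 can be sketched as sorting students into mastery levels for one standard. Galileo’s own mastery levels and cut scores are not given in this post, so the level labels, cut points, student names, and scores below are hypothetical placeholders, and the function is an illustrative sketch rather than the report’s actual logic.

```python
def intervention_groups(scores: dict[str, float],
                        cut_points: list[tuple[float, str]]) -> dict[str, list[str]]:
    """Place each student into the first level whose minimum they meet.

    `scores` maps student -> proportion correct on one standard's items.
    `cut_points` lists (minimum score, level label) from highest to lowest
    and should end with a 0.0 minimum so every student lands somewhere.
    """
    groups: dict[str, list[str]] = {label: [] for _, label in cut_points}
    for student, score in sorted(scores.items()):
        for minimum, label in cut_points:
            if score >= minimum:
                groups[label].append(student)
                break
    return groups


# Hypothetical cut points and scores for one flagged standard.
LEVELS = [(0.75, "mastered"), (0.50, "approaching"), (0.0, "needs intervention")]
text_feature_scores = {"Ana": 0.8, "Ben": 0.4, "Cai": 0.55, "Dee": 0.25}

for level, students in intervention_groups(text_feature_scores, LEVELS).items():
    print(f"{level}: {', '.join(students) or '(none)'}")
```

Listing the cut points from highest to lowest keeps the rule simple: each student lands in the highest level whose minimum they reach, and the groups can then be compared from one benchmark to the next to see movement between levels.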

Monday, October 3, 2011

Measuring Student Growth with ATI’s Instructional Effectiveness Pretests and Posttests

A primary function of ATI’s Instructional Effectiveness (IE) assessments is to evaluate the amount of growth students demonstrate between the pretest and the posttest. The IE posttests consist entirely of current grade-level content, while the IE pretests contain both prior grade-level and current grade-level content. Many Galileo users may wonder how the assessments can measure growth when part of the pretest is aligned to the prior grade level.

The IE pretests and posttests can be used to measure growth because ATI uses state-of-the-art Item Response Theory (IRT) techniques to put the student development level (DL) scores for both the pretest and the posttest on a common scale, so that the scores are directly comparable. ATI DL scores are scale scores, and the calculation of scale scores takes into account the relative difficulty of the items on the assessment. For example, imagine two students take two different tests, and both get 75% correct. If one test is much easier than the other, then when the students’ ability levels (DL scores) are estimated, the student who got 75% correct on the easy test should have a lower ability estimate than the student who got 75% correct on the difficult test. Even though both have the same raw score, the student who took the easy test will have a lower DL score than the student who took the difficult test.
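ATI’s exact IRT model and scaling are not described in this post. The sketch below uses the simplest IRT model, the Rasch (one-parameter logistic) model, as a stand-in to reproduce the two-test example: two students each answer 6 of 8 items (75%) correctly, one on an easy test and one on a hard test. The item difficulties are invented, and ability is reported on the raw logit scale rather than on ATI’s DL scale.

```python
import math


def rasch_prob(theta: float, b: float) -> float:
    """Probability of a correct response under the Rasch (1PL) model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_ability(raw_score: int, difficulties: list[float],
                     tol: float = 1e-10, max_iter: int = 100) -> float:
    """Maximum-likelihood ability estimate for a raw score.

    Under the Rasch model the raw score is a sufficient statistic, so the
    estimate solves sum_i P_i(theta) = raw_score. Newton-Raphson is used.
    Scores of 0 or all-correct have no finite estimate.
    """
    n = len(difficulties)
    if not 0 < raw_score < n:
        raise ValueError("raw score must be strictly between 0 and the item count")
    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_prob(theta, b) for b in difficulties]
        expected = sum(probs)
        information = sum(p * (1.0 - p) for p in probs)
        step = (raw_score - expected) / information
        theta += step
        if abs(step) < tol:
            break
    return theta


# Hypothetical item difficulties (logits); the hard test is the easy test
# shifted up by 1.5 logits.
easy_test = [-2.0, -1.5, -1.0, -1.0, -0.5, -0.5, 0.0, 0.5]
hard_test = [b + 1.5 for b in easy_test]

theta_easy = estimate_ability(6, easy_test)  # 75% correct on the easy test
theta_hard = estimate_ability(6, hard_test)  # 75% correct on the hard test
print(f"75% on easy test: ability {theta_easy:.2f}")
print(f"75% on hard test: ability {theta_hard:.2f}")
```

Because every difficulty on the hard test is 1.5 logits higher, the same 75% raw score yields an ability estimate exactly 1.5 logits higher there: the same raw score, but a different scale score once item difficulty is taken into account.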

When DL scores are calculated for the pretests, the prior grade-level items are treated as if they were easy items. So even though the students should do very well on those items, their DL scores will be adjusted to a slightly lower level because the IRT analysis will see that the items were very easy. The DL scores will be on a scale that is directly comparable to the posttest, and there will be room for growth in DL scores between the pretest and the posttest. In other words, if a student gets exactly the same raw score (percent correct) on the pretest and the posttest, his or her DL score on the posttest will be higher than that on the pretest because the items on the posttest will be seen as being more difficult than the items on the pretest.
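The pretest/posttest argument can be written out for the Rasch model, again used here as an illustrative assumption rather than as ATI’s documented calibration model. Let an $n$-item test have item difficulties $b_i$, and let a student earn raw score $r$ with $0 < r < n$. The maximum-likelihood ability estimate solves the first line below; the remaining lines assume both tests have $n$ items and that, after sorting, each pretest item is no harder than the matching posttest item.

```latex
P_i(\theta) = \frac{1}{1 + e^{-(\theta - b_i)}}, \qquad
S(\theta; \mathbf{b}) = \sum_{i=1}^{n} P_i(\theta), \qquad
S(\hat{\theta}; \mathbf{b}) = r .

% Each P_i decreases in b_i, so easier pretest items raise S at every theta:
b^{\text{pre}}_{(i)} \le b^{\text{post}}_{(i)} \;\; \forall i
\;\Longrightarrow\;
S(\theta; \mathbf{b}^{\text{pre}}) \ge S(\theta; \mathbf{b}^{\text{post}}) \;\; \forall \theta .

% Same raw score r on both tests, and S is strictly increasing in theta:
S(\hat{\theta}_{\text{pre}}; \mathbf{b}^{\text{post}})
  \le S(\hat{\theta}_{\text{pre}}; \mathbf{b}^{\text{pre}})
  = r
  = S(\hat{\theta}_{\text{post}}; \mathbf{b}^{\text{post}})
\;\Longrightarrow\;
\hat{\theta}_{\text{pre}} \le \hat{\theta}_{\text{post}} .
```

The inequality is strict whenever at least one pretest item is strictly easier than its posttest counterpart, which is the “same raw score, higher posttest score” result described above.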

There are a number of ways to learn first-hand about the benefits both of Galileo K-12 Online and of measuring student growth with instructional effectiveness pretests and posttests. You can visit the Assessment Technology Incorporated website (ati-online.com), participate in an online overview by registering either through the website or by calling 1.877.442.5453 to speak with a Field Services Coordinator, or visit us at:

- Arizona School Administrators, Inc. (ASA) Fall Superintendency/Higher Education Conference, October 23 through 25 at the Prescott Resort and Conference Center, Prescott, Arizona

- Massachusetts Computer Using Educators (MassCUE) and the Massachusetts Association of School Superintendents (M.A.S.S.) Annual Technology Conference, October 26 and 27 at Gillette Stadium, Foxborough, Massachusetts

- Arizona Charter School Association (ACSA) 16th Annual Conference November 10 and 11 at the Westin La Paloma Resort, Tucson, Arizona

- Illinois Association of School Boards (IASB), Illinois Association of School Administrators (IASA), and Illinois Association of School Business Officials (IASBO) Joint Annual Conference November 18 through 20 at the Hyatt Regency Chicago, Chicago, Illinois
