The Galileo G3 Assessment Scales for children ages 3-5 are fully articulated to the Head Start Child Development and Early Learning Framework and represent the developmental building blocks that are most important for a child's school and long-term success.

With hundreds of Galileo G3 Activities aligned to the Galileo G3 Assessment Scales, programs have access to a full range of theme-based curriculum options for large groups, small groups, and individual learning opportunities. The activities are based on findings from many years of ATI research in the field of preschool learning. Because storybooks can play a valuable role in the development of young learners, many Galileo G3 Activities incorporate storybooks, whether in a primary role in the activity, as an enrichment opportunity, or as a suggested supplement.

Learn more about how Galileo technology meets the requirements of the Head Start Framework and Monitoring Protocol.

Learn more about Galileo Pre-K Online Curriculum offerings.

Wednesday, December 26, 2012

Tuesday, December 11, 2012

How do you compare benchmarks when the questions and standards are not the same? Can the results from one benchmark to another be compared?

You can compare the results from one Galileo benchmark test to another even when the questions and/or standards are not the same, because Galileo benchmark test scores are placed on a common scale. ATI uses standard scaling techniques from Item Response Theory (IRT) to accomplish this. The scaling process uses information about item characteristics and their relationship to student ability, and the relationship between student ability and item difficulty plays a particularly important role. IRT places student ability and item difficulty on the same scale. For example, in IRT a student of average ability has a fifty-fifty chance of responding correctly to an item of average difficulty. A student of above-average ability has a fifty-fifty chance of responding correctly to an item of correspondingly above-average difficulty. Likewise, a student of below-average ability has a fifty-fifty chance of responding correctly to an item of correspondingly below-average difficulty.
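The fifty-fifty relationship can be illustrated with the simplest IRT model, the one-parameter (Rasch) model. This is a sketch under an assumption: the post does not say which IRT model Galileo uses, and an operational model may include more item parameters (such as discrimination or guessing). Under the Rasch model the chance of a correct answer depends only on the gap between ability and difficulty.

```python
import math


def rasch_probability(ability: float, difficulty: float) -> float:
    """Chance of a correct response under the one-parameter (Rasch) IRT model.

    Ability and difficulty share one logit scale, so only their difference matters.
    """
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


# When ability equals difficulty the chance is 50-50, whether both are
# below average (-1.0), average (0.0), or above average (+1.5).
for theta in (-1.0, 0.0, 1.5):
    print(f"ability = difficulty = {theta:+.1f}: P(correct) = {rasch_probability(theta, theta):.2f}")

# For the same average student, an easier item raises the chance and a harder one lowers it.
print(f"easier item: {rasch_probability(0.0, -1.0):.2f}")  # prints "easier item: 0.73"
print(f"harder item: {rasch_probability(0.0, 1.0):.2f}")   # prints "harder item: 0.27"
```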

The fact that ability and difficulty are measured on the same scale makes it possible to adjust the estimate of ability based on the difficulty of the items included in the assessment. This adjustment is a key factor in the scaling process, making it possible to compare scores from different tests. Such an adjustment is not possible when scores such as percent correct are used. For example, if a student received a score of 80 percent correct on one test and 90 percent correct on a second test, the difference could have occurred because the second test was easier than the first, because student performance improved, or both.
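The 80-versus-90-percent example can be worked through numerically. The sketch below again assumes the Rasch model and uses two hypothetical 10-item tests whose item difficulties are known, with every item on the second test one logit easier. It estimates ability by maximum likelihood; under the Rasch model that reduces to finding the ability at which the expected raw score equals the observed raw score. This illustrates the general idea only and is not ATI's scaling procedure.

```python
import math


def p_correct(ability, difficulty):
    """Rasch-model chance of a correct response."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def expected_percent_correct(ability, difficulties):
    """Percent correct a student of the given ability is expected to earn on a test."""
    return 100.0 * sum(p_correct(ability, b) for b in difficulties) / len(difficulties)


def estimate_ability(raw_score, difficulties, lo=-6.0, hi=6.0, tol=1e-6):
    """Maximum-likelihood Rasch ability estimate, given known item difficulties.

    Under the Rasch model the likelihood equation reduces to
    sum_i P(correct on item i | ability) == raw score.
    The left side rises steadily with ability, so bisection finds the root
    (assumed to lie between lo and hi).
    """
    if not 0 < raw_score < len(difficulties):
        raise ValueError("zero and perfect scores have no finite estimate")
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if sum(p_correct(mid, b) for b in difficulties) < raw_score:
            lo = mid  # expected score too low, so ability must be higher
        else:
            hi = mid
    return (lo + hi) / 2.0


# Hypothetical 10-item tests; every item on Test 2 is one logit easier than on Test 1.
test_1 = [-1.0, -1.0, -0.5, -0.5, 0.0, 0.0, 0.5, 0.5, 1.0, 1.0]
test_2 = [b - 1.0 for b in test_1]

# The same student is expected to score higher on the easier test.
print(f"{expected_percent_correct(1.0, test_1):.0f}% vs {expected_percent_correct(1.0, test_2):.0f}%")

# 80% on the harder test vs 90% on the easier test: compare abilities, not percents.
ability_1 = estimate_ability(8, test_1)
ability_2 = estimate_ability(9, test_2)
print(f"Test 1 ability: {ability_1:.2f}, Test 2 ability: {ability_2:.2f}")
```

With these hypothetical tests, 80 percent on the harder test corresponds to an ability of about 1.53, while 90 percent on the easier test corresponds to about 1.39. The higher percent correct does not by itself indicate growth once item difficulty is taken into account.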

A district-level user can obtain information about item difficulty and other item parameters for each item on a benchmark test by generating the Item Parameters Report for the benchmark test in question.

Click here to watch video.

ATI uses psychometric techniques to guide the construction and validation of measurement instruments, such as the benchmark assessments administered by districts. Psychometrics is the field of study concerned with the theory and technique of psychological and educational measurement, including the measurement of knowledge and abilities.

ATI begins analyzing data once the benchmark test has been given and the testing window has closed. Districts continue to have access to several other reports, such as the Test Monitoring, Item Analysis, and Intervention Alert reports. ATI performs an analysis using psychometric techniques based on Item Response Theory (IRT) to create Galileo Developmental Level (DL) scores.

View the new “The Psyche of Psychometrics” video to learn more.

Read more about Galileo benchmark assessments.

Monday, November 26, 2012

Customizable Rating Scales and Actionable Dashboard Reporting Technology

School districts across several states have expressed the need to develop, customize, administer and manage implementation of their own teacher and principal evaluation tools. The Galileo Instructional Effectiveness Assessment System (IEAS) addresses this need by giving school districts online access to the Administrator Dashboard with instructional effectiveness widgets.

Among other tools, the dashboard includes: 1) an Educator Proficiency Scale Builder; 2) Proficiency Rating Scale Administration tools; 3) an Educator Proficiency Ratings Progress Report; 4) a Proficiency Rating Results Report; and 5) Staff File Import and View functionality.

These features make it possible for a district to take control of the development and use of teacher and principal performance rating scales aligned to the Interstate New Teacher Assessment and Support Consortium (INTASC) Professional Teaching Standards and the Professional Administrative Standards from the Interstate School Leaders Licensure Consortium (ISLLC).

In addition, if the district already has a measure of teacher performance, it may either upload data collected with this existing measure outside of Galileo or enter the existing measure (with appropriate copyright permissions, if applicable) into Galileo for administration and data collection within the system.

Galileo IEAS Dashboard Reports give school districts the capability to manage the implementation of rating scales and rapid access to a continuous flow of actionable information. This information serves not only to identify the proficiency levels of teachers and administrators at the end of the year but also to inform professional development decisions that enhance student learning throughout the year.

For more information on the components of the Galileo IEAS, click here.

Monday, November 19, 2012

Tips for Effective Test Reviews

ATI provides districts the opportunity to review district-created assessments. This process can be an easy and valuable way to ensure that assessments suit specific district and student needs. Listed below are tips for effective test reviews.

1) Keep the purpose of the assessment in mind.

There are numerous purposes for assessments, and the purpose of the assessment should dictate the review. Look at an example from the kindergarten Common Core math standards.

2) Trust the data.

3) Consider the bigger picture.

Every teacher teaches concepts using his or her favorite vocabulary and language. For this reason, reviewers often approach item review expecting to see specific target words on items or wanting to see questions asked in specific formats. Keep in mind that the target words may change as tests and teaching methodologies vary over time. The bigger picture for students is being able to perform the skill and demonstrate knowledge no matter how the item is presented on high-stakes assessments. This will be especially important as traditional state tests transition to the new common assessments created by the SMARTER Balanced and PARCC consortia. As vocabulary and item formats change, students will benefit from having been exposed to multiple formats for testing specific standards. Consider keeping an item on an assessment even if it contains new or different vocabulary, allowing students to gain valuable test-taking experience while expanding their knowledge. Reviewers may be surprised at how adaptable students really are.

4) Allow the students the opportunity to excel.

One trap districts fall into is creating assessments that are too easy. If assessments are too easy, there is no way for the data to show growth from one test to the next. In addition, if all students receive 100 percent on the assessment, the data will not provide information about how to help students get to the next level. Avoiding this pitfall is relatively easy: reviewers should make sure that the assessment includes items spanning a full range of difficulty.
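One way a reviewer might check the difficulty range, once students have taken a draft assessment, is to compute each item's classical difficulty (p-value), the proportion of students who answered it correctly, and to count perfect scores. This is a minimal sketch: the response data and the cutoffs of 0.90 and 0.30 are hypothetical choices for illustration, not ATI guidelines.

```python
def item_p_values(responses):
    """Proportion of students answering each item correctly (classical item difficulty).

    `responses` holds one row per student: 1 = correct, 0 = incorrect.
    """
    n_students = len(responses)
    return [sum(row[i] for row in responses) / n_students for i in range(len(responses[0]))]


def review_difficulty(responses, easy_cutoff=0.90, hard_cutoff=0.30):
    """Flag very easy and very hard items and count perfect scores.

    The cutoffs are illustrative assumptions, not ATI guidelines.
    """
    p = item_p_values(responses)
    return {
        "p_values": p,
        "very_easy_items": [i for i, v in enumerate(p) if v > easy_cutoff],
        "very_hard_items": [i for i, v in enumerate(p) if v < hard_cutoff],
        "perfect_scores": sum(1 for row in responses if all(row)),
    }


# Hypothetical responses from five students to a four-item quiz.
responses = [
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 1, 1, 1],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
]
report = review_difficulty(responses)
print(report)
```

An item that every student answers correctly (p = 1.0) says nothing about differences between students. An assessment built mostly from such items leaves no room for the data to show growth.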

Monday, November 12, 2012

ATI Findings on Predictive Validity and Forecasting Accuracy for the 2011-12 School Year

ATI has released a research brief summarizing current research on the predictive validity of Galileo K-12 Online assessments administered in the 2011-12 school year and the forecasting accuracy of Galileo risk levels based on student performance on these assessments.

The research summarized in the brief was based on data for individual students in grades three through high school in math, reading/English language arts, and science. The sample consisted of the first 26 districts in Arizona, Colorado, and Massachusetts to provide ATI with their statewide assessment data. Collectively, these districts administered 1,105 district-wide assessments.

ATI conducts an Item Response Theory (IRT) analysis for each district-wide assessment which produces a scale score for each student, the Developmental Level (DL) score. Each student is also classified as to their level of risk of failing the statewide assessment based on their performance on all the district-wide assessments they have taken within a given school year. In order of highest to lowest risk of failing the statewide assessment, the possible risk levels comprise “High Risk,” “Moderate Risk,” “Low Risk,” and “On Course.” ATI then evaluates predictive validity by examining the correlation between student DL scores on each district-wide assessment and student scores on the statewide assessment. ATI evaluates forecasting accuracy by examining how students classified at different levels of risk ultimately performed on the statewide assessment.
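To make the predictive-validity step concrete, here is a minimal sketch of the Pearson correlation between student DL scores on one district-wide assessment and the same students' statewide scores. All scores below are hypothetical. The brief does not say exactly how correlations were averaged across assessments, so the simple unweighted mean at the end is an assumption; averaging Fisher z-transformed values is a common alternative.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical DL scores on one district-wide assessment and the same
# students' statewide scale scores.
dl_scores = [612, 655, 701, 588, 734, 690, 645, 720]
state_scores = [480, 505, 530, 470, 560, 515, 500, 545]
print(f"r = {pearson_r(dl_scores, state_scores):.2f}")

# Hypothetical per-assessment correlations, summarized with an unweighted mean.
per_assessment_r = [0.71, 0.77, 0.74]
print(f"mean r = {sum(per_assessment_r) / len(per_assessment_r):.2f}")
```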

“Predictive validity analyses examine the strength of the relationship between two measures of student performance, in this case the student DL scores on an assessment in a given grade and content area and the student scores on the statewide assessment in the same grade and content area,” says brief author Sarah Callahan, Ph.D., Research Scientist of Assessment Technology Incorporated. She further states that “The observed correlations in the 26 districts studied suggest that student scores on the 2011-12 Galileo district-wide assessments were strongly related to student scores on the 2012 statewide assessment.”

Key findings include:

- The mean correlations range from 0.69 to 0.78 across grades and content areas, with an overall mean of 0.75, which is considered a high correlation.

- As student risk level increased, the likelihood of failure on the statewide assessment increased, as illustrated in Figure 1.

- Overall, Galileo risk levels accurately forecast statewide test performance for 84 percent of students, as shown in Figure 2.

- Forecasting accuracy was highest in cases where student performance was most consistent.
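The forecasting analysis can be sketched in a few lines: group students by risk level, compute the share who failed the statewide assessment, and count how often the risk level "called" the outcome correctly. The student records are hypothetical, and treating "High Risk" and "Moderate Risk" as a forecast of failing (with "Low Risk" and "On Course" as a forecast of passing) is an assumption made for illustration; the brief may define forecasting accuracy differently.

```python
from collections import defaultdict

RISK_LEVELS = ["High Risk", "Moderate Risk", "Low Risk", "On Course"]
# Assumption for illustration: these two levels count as a forecast of failing.
FORECAST_FAIL = {"High Risk", "Moderate Risk"}


def failure_rate_by_risk(records):
    """Share of students at each risk level who did not pass the statewide test."""
    totals, fails = defaultdict(int), defaultdict(int)
    for level, passed in records:
        totals[level] += 1
        if not passed:
            fails[level] += 1
    return {level: fails[level] / totals[level] for level in RISK_LEVELS if totals[level]}


def forecast_accuracy(records):
    """Share of students whose forecast (fail vs. pass) matched the actual outcome."""
    hits = sum(1 for level, passed in records if (level in FORECAST_FAIL) == (not passed))
    return hits / len(records)


# Hypothetical (risk level, passed statewide test?) pairs.
records = [
    ("High Risk", False), ("High Risk", False), ("High Risk", True),
    ("Moderate Risk", False), ("Moderate Risk", True),
    ("Low Risk", True), ("Low Risk", True), ("Low Risk", False),
    ("On Course", True), ("On Course", True),
]
print(failure_rate_by_risk(records))
print(f"accuracy = {forecast_accuracy(records):.0%}")
```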

Monday, November 5, 2012

Striving for Educational Excellence: The Roles of Innovation, Collaboration, and Empowerment

Educational excellence is essential to maintaining and expanding the nation’s competitiveness in today’s rapidly changing global community. The challenges associated with attaining and maintaining educational excellence call for innovative and collaborative initiatives that empower teachers as educators, administrators as leaders, and students as learners.

In support of attaining and maintaining educational excellence, ATI provides educators with a comprehensive instructional effectiveness system built in collaboration with school districts, in recognition that it is within the local school district and community that the broad, sweeping ideas of educational reform are carefully vetted in the context of local reality. It is here that educational stakeholders have an in-depth understanding of the educational needs of students and the professional development aspirations of teachers. And it is here that new policies are transformed into everyday practice. Reform is, after all, a local phenomenon, occurring within each school and within each classroom, one student and one teacher at a time.

Click here to learn more about the ways in which the ATI Instructional Effectiveness Assessment System embedded in the Galileo K-12 Online Instructional Improvement System incorporates innovation and collaboration in empowering educators seeking educational excellence.

Monday, October 29, 2012

C.E. Rose Data-Based Success Story

In under a decade, C.E. Rose Elementary School in the Tucson Unified School District in Tucson, Arizona, has gone from approximately 20 to 30 percent of its students passing the state assessment to more than 70 percent passing in both reading and math. The school’s educators attribute these impressive gains to active engagement and motivation of students, planning and collaboration, and the use of Galileo K-12 Online data to guide interventions. “With [Galileo] ... you look at the data and it gives you the motivation you need to keep working toward your ultimate goal ... And I think that’s been one of the most powerful things,” said Rose’s principal, Stephen Trejo. Through ongoing evaluation of its success, the school’s staff plans to continue down this path of achievement.

*In 2010 the state of Arizona introduced a new scale for statewide test scores for mathematics as well as a new standard for proficiency that was not aligned to the previous standard. Therefore, student performance on the statewide assessment in mathematics in 2010 and 2011 is not compared to student performance in years prior to 2010.

Read more about C.E. Rose and its data-based success.

Read more data-based success stories.

Labels: Galileo K-12 Online, success story

Tuesday, October 23, 2012

Custom Test Report

The Custom Test Report is a valuable tool for viewing formative and benchmark test results, including student demographic data that can be used to compare result sets. Filtering and sorting data within a Custom Test Report is easy using Excel. To benefit from the power of this tool, just create your custom test report as desired.

After creating the report, click the link to show “Custom Test Report Activity”:

On the main Custom Test Report activity page, wait for your report name to appear on the list and click the filename to open the report:

The file will open in your default text editor. Next, use the keystroke combination “Control+A” to select all text in the file, then use keystroke “Control+C” to copy the text to your computer’s clipboard.

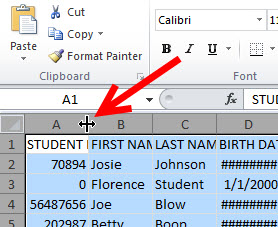

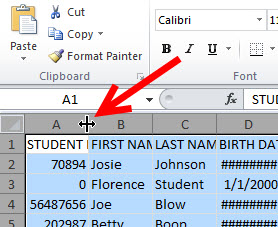

Once the content is copied to the clipboard, open Excel, select cell A1, and use the keystroke combination “Control+V” to paste the content into the spreadsheet. Left-click the blank cell above row 1 and to the left of column A to highlight the entire sheet, then double left-click the border between the column A and column B headers to expand all columns to the appropriate width:

Next, just click on “Home” -> “Sort & Filter” -> “Filter” to set the auto-filtering:

Once this is complete, choose any filtering value you wish. For example, filtering based on grade levels is done by clicking the down-arrow at the top of the appropriate column and then eliminating all undesired grade levels:

Note that the report is now filtered to remove all students who are not in fourth grade:

Alternatively, you can sort data within any column by selecting the desired column and using the “Sort & Filter” button selections:
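For users who prefer a script to the Excel steps, the same filter-and-sort can be sketched with Python's standard `csv` module. Several details are assumptions: that the report text is tab-delimited with a header row (pasting into Excel splits it into columns, which suggests tabs, but the post does not say), and that it contains columns named "Student", "Grade", and "Score". Those column names and the sample rows are hypothetical; a real report's headers will differ.

```python
import csv
import io


def load_report(text, delimiter="\t"):
    """Parse delimited report text (header row first) into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))


def filter_rows(rows, column, keep_values):
    """Keep only rows whose value in `column` is one of `keep_values` (like an Excel filter)."""
    return [row for row in rows if row[column] in keep_values]


def sort_rows(rows, column, numeric=False, descending=False):
    """Sort rows by one column, optionally treating its values as numbers."""
    key = (lambda row: float(row[column])) if numeric else (lambda row: row[column])
    return sorted(rows, key=key, reverse=descending)


# Hypothetical report contents; real column names will differ.
sample = "Student\tGrade\tScore\nAva\t4\t512\nBen\t3\t480\nCruz\t4\t547\n"

rows = load_report(sample)
fourth_grade = filter_rows(rows, "Grade", {"4"})
for row in sort_rows(fourth_grade, "Score", numeric=True, descending=True):
    print(row["Student"], row["Score"])
```

To use a saved copy of the report instead of the sample string, read it with `open(path, newline="", encoding="utf-8")` (the encoding is a guess) and pass the file's contents to `load_report`.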

For more information or a quick reference guide on how to create a Custom Test Report, please contact your Field Services Coordinator.

Labels: benchmark, formative assessment, Galileo reports

Monday, October 15, 2012

Do you know the benefits of implementing the Galileo Instructional Effectiveness Assessment System?

The premise behind instructional effectiveness initiatives is that good teaching and effective educational management can enhance student learning. The Galileo Instructional Effectiveness Assessment System (IEAS) provides users with analysis and reporting of student achievement data, along with the tools, content, and data analysis needed to develop effective and defensible educator and administrator evaluation systems. The customizable tools in the IEAS are aligned to educator effectiveness scales such as the Interstate Teacher Assessment and Support Consortium (INTASC) Model Core Teaching Standards, the Interstate School Leaders Licensure Consortium (ISLLC) Educational Leadership Policy Standards, and individual state department of education standards. Development of the Galileo IEAS has been based both on our more than 25 years of experience and on the needs and requests of users.

A brief summary of the benefits provided by the Galileo IEAS is outlined below. Further details regarding benefits are available in the 2012 white paper titled Instructional Effectiveness Assessment.

Benefits include:

- Reliable and valid IEAS assessments in math, reading/English language arts (ELA), science, and writing.

- Standards for non-state-tested grades and subjects, including subjects such as foreign languages and social studies, are in the system. This means instructional effectiveness (IE) assessments can be created and aligned to these subjects within Galileo using items resulting from the Community Item Banking and Assessment Development Project. Because the banks are still being built up, project participants may need to contribute items to the bank when building an assessment.

- Analysis techniques and reports to evaluate student achievement data in the context of educator evaluation.

- Support for IE assessment in non-state-tested content areas such as music, art, and physical education.

- Tools to design customized educator rating scales aligned to educator effectiveness scales such as the INTASC Model Core Teaching Standards, the ISLLC Educational Leadership Policy Standards, and individual state department of education standards.

- Tools to record and report on data from observations, interviews, and other data sources to rate each teacher and administrator on the elements in the rating scales.

- Tools with which each school district may customize the relative weights applied to evaluation scores as recommended by individual state departments of education. These tools allow districts to adjust the weights applied to student performance data and educator/administrator rating elements to determine final effectiveness scores.

- Algorithms and reporting tools that summarize student performance data and educator/administrator evaluation data from many sources to generate an Overall Evaluation Score for each teacher and administrator.
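The weighting described above amounts to a weighted sum of evaluation components. Here is a minimal sketch, assuming every component has already been converted to a common scale and that the district's weights sum to 1. The component names, the 1-4 scale, the scores, and the weights are all hypothetical; actual weights follow individual state department of education recommendations and district choices.

```python
import math


def overall_evaluation_score(component_scores, weights):
    """Weighted combination of evaluation components into one overall score.

    Assumes every component is already on the same scale and the weights sum to 1.
    """
    if set(component_scores) != set(weights):
        raise ValueError("each component needs exactly one weight")
    if not math.isclose(sum(weights.values()), 1.0):
        raise ValueError("weights must sum to 1")
    return sum(component_scores[name] * weight for name, weight in weights.items())


# Hypothetical components, scores on a 1-4 scale, and district-chosen weights.
scores = {"student_performance": 3.0, "observation_rating": 3.5, "other_rating_elements": 4.0}
weights = {"student_performance": 0.5, "observation_rating": 0.3, "other_rating_elements": 0.2}
print(f"Overall Evaluation Score: {overall_evaluation_score(scores, weights):.2f}")
```

Checking that the weights sum to 1 keeps the overall score on the same scale as its components, so scores remain comparable when a district adjusts the weights.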

To learn more about the Galileo IEAS:

- visit the ATI website (ati-online.com)

- participate in an online demonstration by registering either through the website or by calling 1.877.442.5453 / 520.323.9033 to speak with a Field Services Coordinator

- visit us at:

  - the 52nd Annual California Educational Technology Professionals Association (CETPA) Conference, October 16-19 at the Monterey Convention Center, Exhibit #K16, in Monterey, California.

  - the Massachusetts Computer Using Educators and Massachusetts Association of School Superintendents Annual Technology Conference, October 24 and 25 at Gillette Stadium, Exhibit #418, in Foxborough, Massachusetts.

  - the 25th Annual Arizona Educational Research Association (AERO) Conference, October 26, where we are presenting the luncheon keynote, “Building a Research-Based Approach to Support Local Educational Agency Implementation of the Arizona Framework for Measuring Educator Effectiveness,” in Phoenix, Arizona.

We look forward to talking with you online and at events.

Monday, October 8, 2012

Planning for Parent/Teacher Conferences

As the school year continues to roll on at an increasing pace, it is time to start thinking about one of the most important times of year in the classroom. In many cases we’ve run straight through the first quarter and are bearing down on the end of the first semester. Many schools are beginning to think about planning for parent/teacher conferences. So what are the best tools we can provide parents with for these meetings? There are a couple of reports and documents that teachers can bring with them to help illustrate to the parents or guardians where the student is and what he or she can work on.

Student Assessment History – The Assessment History Report is a useful tool for summarizing the student’s full performance. The report can be run with whatever benchmark, formative, and external test data are available, so teachers can give parents a nice snapshot of their student’s performance so far in the school year.

Individual Development Profile – This is another report that interested parents might find useful. This report is actually linked on the Student Assessment History Report, and it breaks down an individual student’s performance on each standard tested on the most recent assessment.

Student Multi-Test – As you may or may not be aware, a very informative report in Galileo is the Aggregate Multi-Test Report. This report provides projections for student performance and places scores on a common scale, complete with Risk Analysis and a handy graph. What you may not be aware of is that the same report can be run for an individual student.

Psychometrics Quick Reference Guide – Now that the parents have seen the Student Assessment History and the Student Multi-Test reports, they may have a decent understanding of where their student stands, but they also might like some more information on how these developmental level scores are created and what they mean. The psychometrics quick reference guide – available within the Professional Development Forum within Galileo – will help to explain how these scores are generated.

Parent Quick Reference Guide – Many documents can also help parents feel more involved in their student’s education. One such document is the parent-student center quick reference guide, available within the Professional Development Forum within Galileo, which walks the parent or guardian through the K-12 Student-Parent Center. For districts that choose to share class information, teacher information, notes, and more within the K-12 Student-Parent Center, this guide is a great tool for outlining what type of information can be found there.

Ensuring that teachers have the most important information at the ready, and are able to relay it to parents, will help get parents more involved in their student’s education and open the lines of communication.

-Christopher Domschke

Field Services Coordinator

Monday, October 1, 2012

ATI and the Head Start Portrait of Child Outcomes

The Head Start Portrait of Child Outcomes (Portrait) is a grassroots initiative with Assessment Technology Incorporated (ATI) in which Head Start programs join together, pool their data, and share information with the public about children's learning and development. The Portrait reflects the 11 Domains and 37 Domain Elements comprising the Head Start Child Development and Early Learning Framework (Framework) for children three to five years old.

The role of ATI is to continuously analyze and aggregate data gathered by programs and to display the data in reports documenting children's learning and progress on the Domains and Domain Elements. Portrait data are recorded online by Head Start programs and are available in real-time for reporting purposes. Thus, there is a unique opportunity to provide the Head Start community, researchers, policy-makers, and the public with a continuous and changing portrait of outcomes reflecting the course of children's learning throughout the program year.

Head Start programs throughout the nation are already using information on child outcomes in new ways to improve learning. It is the goal of participating programs and the goal of ATI that the information provided through the Head Start multi-state Portrait will be helpful to all of those individuals who are concerned with and dedicated to the challenge of promoting the development of our nation's children.

Register to participate in* or learn more about the Head Start Portrait of Child Outcomes

*By participating, your program's Galileo Online demographic and assessment data will be included in the aggregated data for the Portrait of Child Outcomes. Your program name will be listed as a participant, but no data specific to your program, classes, or children will be shared or identified.

Labels:

Galileo Pre-K Online,

Portrait

Monday, September 24, 2012

Rising to Meet Common Core Expectations for Text Rigor and Content

Common Core State Standards (CCSS) for English language arts (ELA) stress that students read more complex texts. There are several reasons for this:

- Research indicates that pedagogy that focuses on higher-order or critical thinking at the expense of reading complex texts leaves students unprepared for college-level work.

- The texts read by K-12 students have declined in sophistication over the last 50 years, while the complexity of college and workplace texts has remained steady or even increased during the same span of time.

- K-12 students read far more narrative text than expository text, despite the fact that expository text is more challenging for students to read and is the majority of what they are expected to read in college and in the workplace.

- When students do read expository texts, they are usually only asked to skim and scan for specific information.

How ATI ELA Content Specialists Are Responding to CCSS Text Expectations

ATI ELA content specialists are working to meet CCSS expectations for text complexity. This is exemplified by the development of content for RI 11.9, a performance objective which calls for students to “[a]nalyze seventeenth-, eighteenth-, and nineteenth-century foundational U.S. documents of historical and literary significance (including The Declaration of Independence, the Preamble to the Constitution, the Bill of Rights, and Lincoln’s Second Inaugural Address) for their themes, purposes, and rhetorical features.”

The performance objective requires that students engage in rigorous analysis of a prescribed range of texts. ATI's ELA content specialists selected George Washington’s Farewell Address for the beauty and complexity of its writing and for its historical importance. Items were written to guide students’ analysis as they read the address, much as teachers use carefully timed and phrased glosses and questions to call students’ attention to key aspects of a text. Because the performance objective does not limit the type of analysis students should bring to bear on texts, the items written for the address require a range of skills and thought processes:

- Analysis of the meaning of archaic, technical, and figurative language using contextual clues (e.g., “In the first paragraph, what does the phrase ‘clothed with that important trust’ mean?”)

- Summary or paraphrasing of complex, important passages (e.g., “Read the quotation. ‘...and that in withdrawing the tender of service, which silence in my situation might imply, I am influenced by no diminution of zeal for your future interest, no deficiency of grateful respect for your past kindness....’ Which best paraphrases the quotation?”)

- Analysis of Washington’s rhetorical strategies (e.g., “Read the quotation. ‘If benefits have resulted to our country from these services....’ What does Washington accomplish through the use of the passive voice?”)

- Application of historical knowledge to aid in comprehension of the text (e.g., “Read the quotation. ‘You have in a common cause fought and triumphed together....’ Which event is Washington referring to?”)

- Inferring background information based on contextual clues (e.g., “What can you infer from the fifth paragraph about America in 1796?”)

ATI ELA content specialists’ work in developing a robust library of sophisticated expository texts is ongoing. Recent item families added include the Emancipation Proclamation (ninth grade) and several Supreme Court opinions (Brown v. Board of Education [10th grade], Justice Murphy’s dissent in Korematsu v. United States [11th grade], and Gideon v. Wainwright [12th grade]). ELA content specialists are also adding complex expository texts for the lower grades, including an illustrated text about dandelions (“Dandelions”) for first grade; a biography of Abraham Lincoln (“The Man Who Taught Himself to Be President”) and a text about helium (“Up, Up and Away: The Story of Helium”), both for third grade; a text about year-round education (“The Benefits of Year-Round Education”) for sixth grade; and a text about hummingbirds (“Flower-Kissers”) for eighth grade.

Lucas Schippers, Ph.D.

Content Specialist

Assessment Technology Incorporated

Monday, September 17, 2012

Innovative Technology Supporting Innovative Education

ATI is committed to continuous innovation, as evidenced by a number of existing patents and several pending applications. During the past year, ATI has built hundreds of new features into Galileo Online, provided at no additional cost to all clients. This fall, ATI will release a complete Instructional Effectiveness System, again at no additional cost to Galileo users. The system includes three components:

- comprehensive assessment providing reliable and valid measures of student progress used to determine the value added to student performance by each educator being evaluated;

- educator rating scales built using ATI assessment planning, item banking, scale construction, and Item Response Theory (IRT) technology making it possible to accommodate customized scales that can be continuously updated to improve rating scale validity;

- an Evaluation Score Compiler combining and differentially weighting indicators of student progress, educator proficiency, and surveys of school climate to produce an instructional effectiveness score for each educator being evaluated.
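As a rough illustration of what "combining and differentially weighting" indicators can mean, the sketch below computes a weighted average of indicator scores. The indicator names, the 0-100 scale, and the weights are all hypothetical; the post does not describe how the Evaluation Score Compiler actually scales or weights its inputs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Indicator:
    name: str
    score: float   # assumed already on a common 0-100 scale
    weight: float  # hypothetical weight chosen by the district


def effectiveness_score(indicators: List[Indicator]) -> float:
    """Weighted average of indicator scores (weights need not sum to 1)."""
    total_weight = sum(ind.weight for ind in indicators)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(ind.score * ind.weight for ind in indicators) / total_weight


score = effectiveness_score([
    Indicator("student progress", 72, 5),
    Indicator("educator proficiency", 80, 3),
    Indicator("school climate survey", 90, 2),
])
print(score)  # 78.0
```

Changing the weights changes how much each indicator counts toward the final score without changing the indicators themselves.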

The comprehensive assessment component provides continuous feedback to guide professional development during the school year while there is still time to make a difference in student learning.

There are a number of ways to learn first-hand about Galileo K-12 Online. You can:

- visit the ATI website (ati-online.com)

- participate in an online demonstration by registering either through the website or by calling 1.877.442.5453 / 520.323.9033 to speak with a Field Services Coordinator

- visit us at

- Missouri School Boards’ Association in cooperation with Missouri Association of School Administrators Annual Conference September 28 and 29 at the Tan-Tar-A Resort, Osage Beach, Missouri.

- Massachusetts Computer Using Educators and Massachusetts Association of School Superintendents Annual Technology Conference October 24 and 25 at Gillette Stadium, Foxborough, Massachusetts.

Monday, September 10, 2012

Galileo Online Pre-K Parent Center

Preschoolers are active and continuously engaged learners, finding any environment an appropriate place to explore, discover, and grow. This love of learning bubbles over into all areas of a child’s life. A strong partnership between parents and educators may significantly enhance children’s development by allowing a complementary approach to learning both at home and in the classroom. The Galileo Pre-K Parent Center offers a dynamic tool that supports a family’s engagement in their child’s educational experience.

The Pre-K Parent Center is a secure area within Galileo Pre-K Online that offers educators a platform to communicate with families by posting notes and lesson plans. Through the Center, parents have access to up-to-date information about their child’s learning and classroom experiences. The Center also allows parents to print both At-Home Activities to share with their child and up-to-date reports on his or her developmental progress. Through the Pre-K Parent Center, family involvement and engagement in a child’s education can be supported and monitored. Family involvement in a child’s education is an educational opportunity for the child and an important reporting component for Head Start and Early Head Start programs.

Accessing the Pre-K Parent Center is quick and easy through the Assessment Technology Incorporated home page, using a username and password provided by the child’s teacher.

For more information on the Pre-K Parent Center, contact an ATI Field Services Coordinator at 1.877.358.7611 or at GalileoInfo@ati-online.com.

Labels:

Galileo Pre-K Online,

Parent Center

Tuesday, September 4, 2012

Innovation, Collaboration, and Empowerment: Essential Elements for Developing High-Quality Assessments in Non-State-Tested Subjects

In this new age of educational reform it is widely acknowledged that local empowerment, collaboration, and innovation are essential elements for building and sustaining success for students. For example, while state and federal agencies define and deploy large-scale, end-of-year assessments for use in high-stakes testing initiatives and while national consortia groups plan to follow suit, the educational imperative for reform is really at the local school district level and calls for the development and use of assessment tools in all subject areas (e.g., art, music, foreign languages, social studies, etc.) on a continuous basis to inform and empower instructional decision-making.

It is here, at the local level, and more specifically within the classroom, that education across all subject areas occurs. It is here that reliable and valid assessments in all subject areas need to be used regularly to inform instruction. And it is here that the intelligent integration of assessment, reporting, and decision-making leads to action aimed at elevating student learning. In this regard, assessment planning and test construction in all subject areas must clearly articulate local education goals and local pacing calendars reflecting the scope and sequence of instruction for the school year. Moreover, assessment tools in non-state-tested areas must be flexible and readily adaptable enough to accommodate both current and future assessment needs.

Accomplishing these goals requires a change in direction – a change that calls for moving beyond the inherent limitations of fixed, static tests toward a more dynamic, collaborative, and locally empowered approach to assessment including the components of item development, item banking, test construction, and psychometric validation. This can be accomplished by taking advantage of the benefits afforded to local school districts and charter schools through new innovations in technology, research, and professional development currently being implemented by Assessment Technology Incorporated (ATI) in partnership with the educational community. For example, these innovations now make it possible and practical for school districts and charter schools to come together as a community and to realize their goals for developing and deploying reliable, valid, and fair assessments in subject areas not tested on statewide assessments.

Now in its first full year of implementation across several states, the ATI Community Assessment and Item Banking Project is a way for school districts and charter schools to join together at the grass-roots level to develop a continuously expanding community item bank and locally designed “best-fit” reliable and valid assessments in non-state-tested subject areas. As part of the Project, ATI is assisting in the preparation of educators to develop high-quality items and is providing services related to professional development and training, standards-aligned item and assessment development, and data analysis research and reporting tools. As a result, participating districts and charter schools gain direct access to a continually growing repository of shared locally-written, high-quality certified items and customized assessments in areas not currently addressed on statewide tests.

Participants in the Project benefit from the contributions of all participating districts and charter schools, as well as from the professional development provided by ATI, access to the Galileo Assessment Planner and Galileo Bank Builder technology tools, and the research provided by ATI to help ensure the reliability and validity of assessments in non-state-tested subject areas.

What are your thoughts about this initiative? Please leave a comment to this post.

Interested in learning more or participating? Contact us at 1.877.442.5453 or at GalileoInfo@ati-online.com, and see: http://ati-online.com/pdfs/CommunityItemBankingProjectFAQ.pdf

- Jason K. Feld, Ph.D.

ATI Vice President Corporate Projects

Monday, August 27, 2012

ATI Early Literacy Benchmark Assessment Series

What is the Early Literacy Benchmark Assessment Series?

The 2012-2013 Early Literacy Benchmark Assessment Series (EL-BAS) consists of computer-presented assessments aligned to the Common Core State Standards for kindergarten and first grade.

The EL-BAS is consistent with the research-based findings and recommendations from national and state panels (e.g., the National Reading Panel, the National Early Literacy Panel, and the Task Force on Reading Assessment established by Arizona HB 2732).

The EL-BAS assesses critical aspects of early literacy as appropriate for each grade level, including:

• print concepts

• phonological awareness

• phonics and word recognition

• vocabulary acquisition and use

• comprehension of text

For the 2012-2013 school year, three assessments from the Early Literacy Benchmark Assessment Series are available for each grade level. The three assessments are designed to assess the development of early literacy skills throughout the year and cover the standards in a progressively more comprehensive manner. ATI recommends administering these assessments in fall, winter, and spring.

For more information, read Frequently Asked Questions: ATI Early Literacy Benchmark Assessment Series.

Monday, August 20, 2012

Reliability and Validity: Key Considerations when Measuring Student Growth

How can pretest reliability be enhanced without lengthening the assessment?

Here at ATI we have created comprehensive pretests and posttests to help districts and schools measure student growth over the entire year. Increasingly, districts and schools are using data about student growth to evaluate instructional effectiveness. It is important to maximize the reliability of these assessments so that they provide the most precise estimates of student ability and growth. In some cases, the reliability of traditional pretests may be lower than desirable, because students tend to perform poorly on an assessment composed entirely of items on current grade-level standards for which they have not yet received any instruction. ATI’s current approach to pretest design is to maximize reliability by including both items that assess current grade-level standards and items that assess prior grade-level standards on which students have already received instruction. ATI has conducted research examining how including items on prior grade-level standards affects assessment reliability. To learn more about the findings of this research, click here to read the research brief.
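The post does not say which reliability coefficient ATI reports. As one common example, the sketch below computes KR-20 (Kuder-Richardson Formula 20), an internal-consistency index for items scored right/wrong. It hints at why untaught content can hold pretest reliability down: an item that nearly every student misses has a proportion correct p near 0, so it contributes almost no score variance and adds little to internal consistency. The data are hypothetical.

```python
from statistics import pvariance
from typing import List


def kr20(responses: List[List[int]]) -> float:
    """Kuder-Richardson Formula 20 for right/wrong (1/0) item scores.

    responses[s][i] is student s's score on item i.
    """
    if not responses:
        raise ValueError("need at least one student")
    n_students = len(responses)
    k = len(responses[0])
    if k < 2:
        raise ValueError("need at least two items")
    if any(len(row) != k for row in responses):
        raise ValueError("every student needs a score on every item")

    # Variance of students' total scores (population form).
    totals = [sum(row) for row in responses]
    total_var = pvariance(totals)
    if total_var == 0:
        raise ValueError("total scores have zero variance")

    # Sum of item variances p * (1 - p); an item nearly everyone misses has p near 0.
    pq_sum = 0.0
    for i in range(k):
        p = sum(row[i] for row in responses) / n_students
        pq_sum += p * (1 - p)

    return (k / (k - 1)) * (1 - pq_sum / total_var)


# Four students, three items (hypothetical data).
print(kr20([[1, 1, 1], [1, 1, 0], [1, 0, 0], [0, 0, 0]]))  # 0.75
```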

What would help you be more effective and what tools are you looking for to help provide the data needed for decision making?

We would like to hear from you. Add a comment to share your best practices and how you are using data for decision making.

Learn more about and experience Galileo for yourself. There are a number of ways to learn first-hand about Galileo K-12 Online. You can:

- visit the Assessment Technology Incorporated website

- participate in an online demonstration by registering either through the website or by calling 1.877.442.5453/520.323.9033 to speak with a Field Services Coordinator

- visit us at

- Missouri School Boards’ Association in cooperation with Missouri Association of School Administrators Annual Conference September 28 and 29 at the Tan-Tar-A Resort, Osage Beach, Missouri.

- Massachusetts Computer Using Educators and Massachusetts Association of School Superintendents Annual Technology Conference October 24 and 25 at Gillette Stadium, Foxborough, Massachusetts.

Labels:

instructional effectiveness

Monday, August 13, 2012

Rating Scales: A New Take

Observation has long been a keystone of teacher evaluation. The evaluator, usually the principal, comes into the classroom with clipboard in hand, fills out the district-approved tool, makes some notes, and ultimately generates a score that is used for purposes such as determining bonuses or guiding professional development. Recently, many states (including Arizona, Massachusetts, and Colorado) have passed legislation mandating structured observation at least twice during the course of the year.

Given the important role of rating scales, it makes sense to look at how they are typically constructed and used. Many of the instruments in use today were written by a team of experts, pilot tested, and then adopted. Each exists as a single, fixed tool.

We think there is a better, more flexible way. Rather than constructing a single instrument, we are working to develop a bank of items that can be used to construct customized rating scales, much as we provide the capability to construct customized benchmark assessments that provide targeted information on student mastery of the specific standards that have been a focus of instruction.

Approaching the construction of instructional rating scales in much the same way that we construct assessments of student learning provides a number of advantages. First and foremost, just as student assessments may be customized, rating scales may be built to reflect the particular interests of a district. If a new professional development program has been implemented, a tool can be constructed that reflects the specific skills it emphasized, all without losing the ability to compare scores over time with an instrument that has a somewhat different focus. The second advantage is that the resulting scores can be used to identify the specific teacher actions in the classroom that lead to better results.
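Comparing scores across instruments with a different focus depends on placing items from both on one scale. The sketch below is a minimal illustration, assuming each rating-scale indicator is scored met/not met (1/0) and that item difficulties have already been calibrated on a common scale under a Rasch (one-parameter IRT) model. The post does not name ATI's model, operational rating scales often use more than two categories, and the function names and difficulty values are hypothetical.

```python
import math
from typing import Sequence


def rasch_p(theta: float, b: float) -> float:
    """Rasch probability of a 1 ('met') for ability theta and item difficulty b."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def estimate_theta(responses: Sequence[int], difficulties: Sequence[float],
                   max_iter: int = 50, tol: float = 1e-10) -> float:
    """Maximum-likelihood ability estimate via Newton-Raphson.

    Difficulties are assumed to be pre-calibrated on a common scale.
    """
    if len(responses) != len(difficulties):
        raise ValueError("one difficulty per response is required")
    raw = sum(responses)
    if raw == 0 or raw == len(responses):
        # The likelihood has no finite maximum for all-0 or all-1 patterns.
        raise ValueError("need a mixed response pattern")

    theta = 0.0
    for _ in range(max_iter):
        probs = [rasch_p(theta, b) for b in difficulties]
        gradient = sum(x - p for x, p in zip(responses, probs))  # observed - expected
        information = sum(p * (1.0 - p) for p in probs)
        step = gradient / information
        theta += step
        if abs(step) < tol:
            break
    return theta


form_a = [-1.0, 0.0, 1.0]  # hypothetical difficulties for one scale
form_b = [0.0, 1.0, 2.0]   # a differently focused, harder scale
print(estimate_theta([1, 1, 0], form_a))
print(estimate_theta([1, 1, 0], form_b))
```

Both estimates land on the same scale: the same pattern of ratings on the harder form yields an estimate one unit higher, because under the Rasch model only the difference between theta and b enters the probability. Note that rasch_p(b, b) is 0.5: a respondent whose ability equals an item's difficulty has a fifty-fifty chance.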

ATI is releasing rating tools that are built using this approach this fall. We look forward to hearing responses from districts as they implement the new approach.

Monday, August 6, 2012

Instructional Effectiveness and Student Growth

With widespread interest regarding the evaluation of instructional effectiveness, many states have enacted new legislation outlining assessment requirements to guide the evaluation process. In particular, there is an increasing focus on including measures of student growth as part of the evaluation framework.

To assist you, ATI has developed the Galileo Instructional Effectiveness Assessment System (IEAS), in keeping with the premise of instructional effectiveness (IE): that good teaching and effective educational management can enhance student learning. Key to the system are highly reliable IE pretests and posttests, designed to assess student academic progress and growth during the school year. Going further, the IEAS provides data about student achievement and growth derived from advanced statistical analyses, including Categorical Growth Analysis. The IEAS also provides additional tools, content, and reports to help districts develop effective and defensible educator and administrator evaluation systems.

To explore the Galileo IEAS further, please contact an ATI Field Services Coordinator. We look forward to describing how the Galileo IEAS can be easily incorporated into your local instructional improvement plans to help meet the goals of your state legislation/framework for instructional effectiveness.

Additional Resources:

Innovative Tools: Instructional Effectiveness Assessment System

Frequently Asked Questions and Benefits: Galileo Instructional Effectiveness Assessment System

Instructional Effectiveness White Paper

Labels:

instructional effectiveness

Monday, July 30, 2012

Deciding on What Types of Assessments to Use in 2012-13

As districts design their assessment plans for the year, there are a number of factors to take into consideration. Answering the following questions can help expedite the process.

1) What subjects and grade levels would your district like to assess for 2012-13?

ATI has math, reading, writing, and science item banks currently available for creating assessments.

2) Does your district want to assess the current standards or the new Common Core State Standards?

ATI has made all sets of item banks available for 2012-13 assessment creation.

3) Does your district have a pacing guide?

A predetermined pacing guide puts your district in a good position to consider ATI’s district-curriculum aligned assessment benchmarks. If not, consider ATI’s comprehensive or state blueprint assessments.

4) Which kind of decision-making data is the priority for your district: data reflecting outcomes from instruction, data informing next steps in instruction, or data predicting how students will do on the state tests?

No matter what your district’s priorities, ATI will create assessments which suit district assessment needs.

5) Does your district have a method for collecting and using valid and reliable data to evaluate teachers to comply with new state laws?

If the answer is “No,” ask your district Field Services Coordinator about ATI’s instructional effectiveness assessments.

6) Would a pretest and posttest benefit teachers and students?

ATI has premade pretests and posttests, aligned to the standards for K-12 English language arts, math, and science, that are ready to administer.

Once you have answered these questions, contact your Educational Management Services or Field Services representative to begin the test creation process. ATI can have premade assessments ready to administer within two weeks and district-curriculum aligned assessments ready within six weeks.

Monday, July 23, 2012

New Video Resources for Galileo K-12 Online

At ATI, we continue to look for ways to simplify the use of Galileo and enhance the benefits it provides. Check out our new videos on Common Core State Standards and Instructional Effectiveness. These videos are the latest in a series designed to support school districts transitioning toward new educational initiatives.

ATI supports a district’s adoption and implementation of the Common Core State Standards (video link available in the blue ribbon on the ATI home page) through both the standards-aligned comprehensive assessment system and the instructional components of Galileo K-12 Online. As the transition to the Common Core standards continues, ATI is supporting educators by providing assessments that identify areas of deficiency and inform instructional decisions aimed at ensuring standards mastery.

Dr. Andi Fourlis, Assistant Superintendent for the Scottsdale Unified School District, shares how the District has created a best practices system for instructional effectiveness (video link available in the blue ribbon on the ATI home page). An ongoing, reflective approach, together with the implementation of Galileo K-12 Online, is providing the District with data important in guiding both instruction and professional development, the keystones in enhancing student learning.

The full ATI video series is available through the video link in the blue ribbon on the ATI home page.

Experience Galileo for yourself. There are a number of ways to learn first-hand about Galileo K-12 Online: visit the Assessment Technology Incorporated website; participate in an online demonstration by registering through the website or by calling 1.877.442.5453 to speak with a Field Services Coordinator; or visit us at the following events:

- Colorado Association of School Executives Conference July 23 through 27 at the Beaver Run Resort, Breckenridge, Colorado;

- Missouri School Boards’ Association, in cooperation with the Missouri Association of School Administrators, Annual Conference September 28 and 29 at the Tan-Tar-A Resort, Osage Beach, Missouri; and

- Massachusetts Computer Using Educators and Massachusetts Association of School Superintendents Annual Technology Conference October 24 and 25 at Gillette Stadium, Foxborough, Massachusetts.

Monday, July 16, 2012

By Your Side

In this ever-changing educational landscape, ATI remains dedicated to continuing its unwavering track record of technological innovation in support of educational initiatives, a record that began in 1986. A recent major change in the educational landscape was the June 2, 2010 release of the Common Core State Standards for state adoption. To support school districts in transitioning toward full implementation of the Common Core standards, ATI has been actively engaged in continuous innovation in assessment and reporting, as well as in content development, research, and professional development activities. Assessment item innovations include performance-based and technology-enhanced item types for assessing Common Core skills.

Another recent and major change in the educational landscape is the emphasis on instructional effectiveness. Instructional effectiveness mandates across the country tend to require reliable and valid measurement of student growth, documentation of teacher and administrator skills, and a reporting mechanism that provides a single score for each educator. To help school districts meet instructional effectiveness guidelines, ATI has been developing technology that compiles categorical data into a single score for each educator.

Two years ago, ATI began developing instructional effectiveness tools. One example is the set of secure pretests and posttests for language arts and mathematics. This part of the initiative was piloted with a large group of districts during the 2011-2012 academic year. The pilot was very successful, providing districts with reliable and valid assessments for measuring student growth in these two areas. The tools will be available to all clients for the upcoming academic year.

Another component of the ATI instructional effectiveness initiative is the Community Item Banking and Assessment Development Project, through which districts develop and share assessment items and assessments for non-state-tested subjects and grade levels. Participating districts are provided technology, services, and professional development related to item writing. ATI also provides supporting technology and expert services for assessment development, and conducts data analyses to establish the psychometric properties of items and to evaluate the validity, reliability, and fairness of the resulting assessments. Participating districts are given access to a continually growing repository of shared, high-quality, district-written items and customized assessments in areas not currently addressed by statewide tests.
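
The post mentions data analyses that establish the psychometric properties of items. As an illustration only, here is a minimal sketch of two classical test theory item statistics commonly used in a first-pass item review: item difficulty (the proportion of students answering correctly) and the point-biserial correlation between an item and the total score. This is not ATI's procedure (ATI describes using Item Response Theory for scaling), and the function name item_statistics and the 0/1 response-matrix input are assumptions made for this example.

```python
import math
from statistics import mean, pstdev


def item_statistics(responses):
    """Hypothetical classical item analysis (not ATI's method).

    responses: list of student rows, each a list of 0/1 item scores
    (1 = correct). Returns one (difficulty, point_biserial) tuple per item.
    The point-biserial here is uncorrected: the item is included in the
    total score it is correlated with.
    """
    totals = [sum(row) for row in responses]  # each student's raw score
    mean_total = mean(totals)
    sd_total = pstdev(totals)  # population SD, as the formula requires
    stats = []
    for j in range(len(responses[0])):
        scores = [row[j] for row in responses]
        p = mean(scores)  # difficulty: proportion answering correctly
        # Correlation is undefined if everyone (or no one) got the item
        # right, or if all total scores are identical.
        if sd_total == 0 or p in (0, 1):
            stats.append((p, float("nan")))
            continue
        # Mean total score among students who answered this item correctly.
        mean_correct = mean(t for t, s in zip(totals, scores) if s == 1)
        r_pb = (mean_correct - mean_total) / sd_total * math.sqrt(p / (1 - p))
        stats.append((p, r_pb))
    return stats


if __name__ == "__main__":
    data = [[1, 1, 0], [1, 0, 0], [1, 1, 1], [0, 0, 0]]
    for j, (p, r) in enumerate(item_statistics(data)):
        print(f"item {j}: difficulty={p:.2f}, point-biserial={r:.3f}")
```

Items with a point-biserial near zero or negative are typically flagged for review, since stronger students are not more likely to answer them correctly; the cutoffs used for flagging vary by testing program.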

With ATI staff by your side, you will receive a full instructional improvement system that continually evolves in response to changing federal and state requirements, client needs, ongoing research in educational assessment and instruction, and advances in technology.

Monday, July 9, 2012

Assessment Component in Galileo K-12 Online

Assessment is a central component of the Galileo K-12 Online Instructional Improvement System (IIS). The purpose of the Galileo IIS is to provide management tools that can assist educators in promoting student learning. The comprehensive assessment component includes benchmark and formative assessments along with many other forms of assessment, including placement, computerized adaptive testing, and instructional effectiveness assessments.

Galileo’s assessment component is intended to inform instruction aimed at promoting the mastery of standards reflecting valued educational goals. Currently, the standards selected to guide instruction are generally state, local, or, in some cases, Common Core State Standards. If benchmark and formative assessments are to be effective in informing instruction, it is essential that the relevant standards be reflected in item construction and assessment design. Assessing students with items that do not reflect the standards in use by the district calls into question the validity and accountability of the assessment system and the student achievement data it provides.

As Haladyna notes, "The use of a test that is poorly aligned with the state’s curriculum and content standards, coupled with test-based accountability, results in test scores that may not be validly interpreted or used" (Haladyna, 2004, pp. 9-10).

Haladyna, T. M. (2004). Developing and validating multiple-choice test items. Mahwah, NJ: Lawrence Erlbaum Associates, Inc.

The Galileo assessment component is effective in informing instruction and developing assessments because it:

- adheres to industry standards for item construction and assessment design;

- provides information on student mastery of standards;

- provides recommendations on what to teach next;